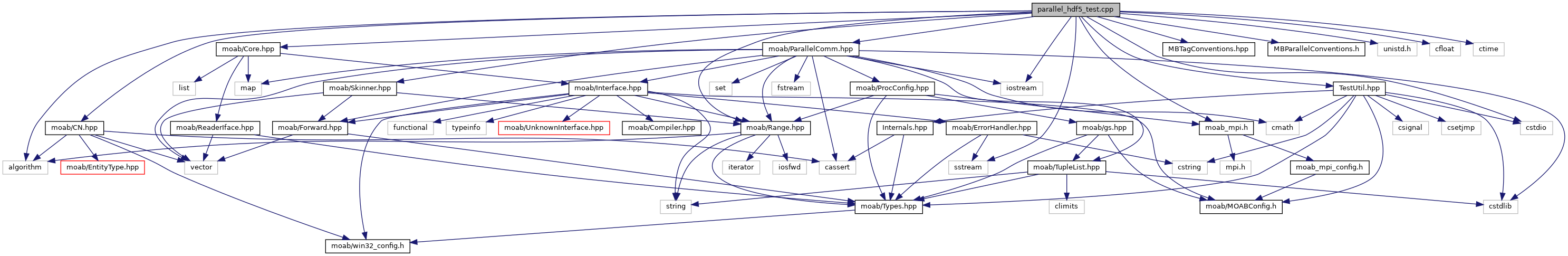

#include "moab/Range.hpp"#include "TestUtil.hpp"#include "moab/Core.hpp"#include "moab/ParallelComm.hpp"#include "moab/Skinner.hpp"#include "MBTagConventions.hpp"#include "moab/CN.hpp"#include "MBParallelConventions.h"#include <iostream>#include <sstream>#include <algorithm>#include "moab_mpi.h"#include <unistd.h>#include <cfloat>#include <cstdio>#include <ctime> Include dependency graph for parallel_hdf5_test.cpp:

Include dependency graph for parallel_hdf5_test.cpp:Go to the source code of this file.

Enumerations

| Type | Name |
|---|---|
| enum | Mode { READ_PART, READ_DELETE, BCAST_DELETE } |

Functions

| Type | Name |
|---|---|
| void | load_and_partition(Interface &moab, const char *filename, bool print_debug=false) |
| void | save_and_load_on_root(Interface &moab, const char *tmp_filename) |
| void | check_identical_mesh(Interface &moab1, Interface &moab2) |
| void | test_write_elements() |
| void | test_write_shared_sets() |
| void | test_var_length_parallel() |
| void | test_read_elements_common(bool by_rank, int intervals, bool print_time, const char *extra_opts=0) |
| void | test_read_elements() |
| void | test_read_elements_by_rank() |
| void | test_bcast_summary() |
| void | test_read_summary() |
| void | test_read_time() |
| void | test_read_tags() |
| void | test_read_global_tags() |
| void | test_read_sets_common(const char *extra_opts=0) |
| void | test_read_sets() |
| void | test_read_sets_bcast_dups() |
| void | test_read_sets_read_dups() |
| void | test_read_bc_sets() |
| void | test_write_different_element_types() |
| void | test_write_different_tags() |
| void | test_write_polygons() |
| void | test_write_unbalanced() |
| void | test_write_dense_tags() |
| void | test_read_non_adjs_side() |
| std::string | get_read_options(bool by_rank=DEFAULT_BY_RANK, Mode mode=DEFAULT_MODE, const char *extra_opts=0) |
| std::string | get_read_options(const char *extra_opts) |
| std::string | get_read_options(bool by_rank, const char *extra_opts) |
| int | main(int argc, char *argv[]) |
| void | print_partitioned_entities(Interface &moab, bool list_non_shared=false) |
| void | count_owned_entities(Interface &moab, int counts[MBENTITYSET]) |
| bool | check_sets_sizes(Interface &mb1, EntityHandle set1, Interface &mb2, EntityHandle set2) |
| void | create_input_file(const char *file_name, int intervals, int num_cpu, int blocks_per_cpu=1, const char *ijk_vert_tag_name=0, const char *ij_set_tag_name=0, const char *global_tag_name=0, const int *global_mesh_value=0, const int *global_default_value=0, bool create_bcsets=false) |
| Tag | get_tag(Interface &mb, int rank, bool create) |

Variables

| Type | Name |
|---|---|
| int | ReadIntervals = 0 |
| const char | PARTITION_TAG[] = "PARTITION" |
| bool | KeepTmpFiles = false |
| bool | PauseOnStart = false |
| bool | HyperslabAppend = false |
| const int | DefaultReadIntervals = 2 |
| int | ReadDebugLevel = 0 |
| int | WriteDebugLevel = 0 |
| int | ReadBlocks = 1 |
| const Mode | DEFAULT_MODE = READ_PART |
| const bool | DEFAULT_BY_RANK = false |

Enumeration Type Documentation

◆ Mode

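enum Mode

| Enumerator | Description |
|---|---|
| READ_PART | Each process reads only its own part of the file. |
| READ_DELETE | Each process reads the whole file, then deletes the entities outside its part. |
| BCAST_DELETE | The root process reads the file and broadcasts it; each process then deletes the entities outside its part. |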

Definition at line 95 of file parallel_hdf5_test.cpp.

Function Documentation

◆ check_identical_mesh()

void check_identical_mesh(Interface &moab1, Interface &moab2)

Definition at line 490 of file parallel_hdf5_test.cpp.

References moab::Range::begin(), CHECK, CHECK_EQUAL, CHECK_ERR, moab::Range::clear(), moab::Range::end(), moab::Range::erase(), ErrorCode, moab::Interface::get_connectivity(), moab::Interface::get_coords(), moab::Interface::get_entities_by_type(), MBEDGE, MBENTITYSET, MBVERTEX, moab::Range::size(), and t.

Referenced by test_write_elements().

◆ check_sets_sizes()

bool check_sets_sizes(Interface &mb1, EntityHandle set1, Interface &mb2, EntityHandle set2)

Definition at line 608 of file parallel_hdf5_test.cpp.

References CHECK_ERR, moab::CN::EntityTypeName(), ErrorCode, moab::Interface::get_number_entities_by_type(), MBMAXTYPE, MBVERTEX, and t.

Referenced by test_write_shared_sets().
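check_sets_sizes compares two entity sets, possibly held by different Interface instances, type by type; its reference list shows it walking entity types from MBVERTEX up to MBMAXTYPE and naming them with CN::EntityTypeName. The sketch below shows only that per-type comparison, assuming the per-type counts have already been gathered (in MOAB they would come from get_number_entities_by_type). The SketchType enum, the EntityCounts alias and compare_set_sizes are hypothetical stand-ins, not MOAB API.

```cpp
#include <array>
#include <cassert>
#include <iostream>

// Hypothetical stand-in for MOAB's EntityType; only a few types are listed.
enum SketchType { VERTEX = 0, EDGE, QUAD, HEX, NUM_SKETCH_TYPES };

// Hypothetical per-type entity counts for one set, indexed by SketchType.
using EntityCounts = std::array<int, NUM_SKETCH_TYPES>;

const char* sketch_type_name(int t)
{
    assert(t >= 0 && t < NUM_SKETCH_TYPES);  // defensive range check
    static const char* const names[NUM_SKETCH_TYPES] = { "Vertex", "Edge", "Quad", "Hex" };
    return names[t];
}

// Returns true when both sets hold the same number of entities of every
// type, and prints each mismatching type to stderr.
bool compare_set_sizes(const EntityCounts& set1, const EntityCounts& set2)
{
    bool same = true;
    for (int t = 0; t < NUM_SKETCH_TYPES; ++t)
    {
        if (set1[t] != set2[t])
        {
            std::cerr << sketch_type_name(t) << ": " << set1[t] << " != " << set2[t] << '\n';
            same = false;
        }
    }
    return same;
}
```

Reporting every mismatching type, instead of stopping at the first one, makes a failed comparison easier to diagnose.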

◆ count_owned_entities()

void count_owned_entities(Interface &moab, int counts[MBENTITYSET])

Definition at line 474 of file parallel_hdf5_test.cpp.

References CHECK, CHECK_ERR, moab::Range::empty(), ErrorCode, moab::ParallelComm::filter_pstatus(), moab::ParallelComm::get_pcomm(), MBENTITYSET, MBVERTEX, PSTATUS_NOT, PSTATUS_NOT_OWNED, moab::Range::size(), and t.

Referenced by test_write_elements().
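count_owned_entities fills counts[MBENTITYSET], one slot per entity type from MBVERTEX up to (but not including) MBENTITYSET, with the number of entities this process owns; its reference list shows it filtering with ParallelComm::filter_pstatus and the PSTATUS_NOT_OWNED / PSTATUS_NOT flags. The sketch below reproduces the idea without MOAB: each entity carries a type and a parallel-status bitmask, and entities whose "not owned" bit is set are skipped. The SketchEntity struct and the bit value chosen for kNotOwned are assumptions for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical subset of entity types; SET plays the role of MBENTITYSET
// and doubles as the array size, mirroring counts[MBENTITYSET].
enum SketchType { VERTEX = 0, EDGE, QUAD, HEX, SET };

// Assumed bit meaning "this process does not own the entity".
constexpr std::uint8_t kNotOwned = 0x1;

struct SketchEntity
{
    SketchType type;
    std::uint8_t pstatus;  // parallel-status bitmask
};

std::array<int, SET> count_owned(const std::vector<SketchEntity>& entities)
{
    std::array<int, SET> counts{};  // zero-initialized
    for (const SketchEntity& e : entities)
    {
        assert(e.type <= SET);                // defensive range check
        if (e.type == SET) continue;          // sets are not counted
        if (e.pstatus & kNotOwned) continue;  // owned by another process
        ++counts[e.type];
    }
    return counts;
}
```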

◆ create_input_file()

void create_input_file(const char *file_name, int intervals, int num_cpu, int blocks_per_cpu = 1, const char *ijk_vert_tag_name = 0, const char *ij_set_tag_name = 0, const char *global_tag_name = 0, const int *global_mesh_value = 0, const int *global_default_value = 0, bool create_bcsets = false)

Definition at line 788 of file parallel_hdf5_test.cpp.

References moab::Core::add_entities(), CHECK_ERR, moab::Core::create_element(), moab::Core::create_meshset(), moab::Core::create_vertex(), DIRICHLET_SET_TAG_NAME, moab::dum, ErrorCode, moab::Skinner::find_skin(), moab::Core::get_adjacencies(), MATERIAL_SET_TAG_NAME, mb, MB_TAG_CREAT, MB_TAG_DENSE, MB_TAG_EXCL, MB_TAG_SPARSE, MB_TYPE_INTEGER, MBHEX, MESHSET_SET, NEUMANN_SET_TAG_NAME, PARTITION_TAG, moab::Core::tag_get_handle(), moab::Core::tag_set_data(), moab::Interface::UNION, and moab::Core::write_file().

Referenced by test_read_bc_sets(), test_read_elements_common(), test_read_global_tags(), test_read_sets_common(), test_read_tags(), and test_read_time().
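create_input_file writes a hexahedral (MBHEX) test mesh whose size is driven by intervals, num_cpu and blocks_per_cpu. As a rough guide to how those parameters scale the mesh, the sketch below computes vertex and hex counts for one hypothetical layout: num_cpu * blocks_per_cpu cubic blocks of intervals^3 hexes each, stacked along a single axis so that neighbouring blocks share a face of vertices. The layout, the MeshSize struct and the function name are assumptions; the source at line 788 defines the arrangement the test actually builds.

```cpp
#include <cassert>

struct MeshSize
{
    long vertices;
    long hexes;
};

// Hypothetical layout: B = num_cpu * blocks_per_cpu cubes of n^3 hexes stacked
// in a line, so the mesh is a (B*n) x n x n grid of hexes and adjacent blocks
// share the (n+1)^2 vertices on their common face.
MeshSize stacked_block_mesh_size(int intervals, int num_cpu, int blocks_per_cpu)
{
    assert(intervals > 0 && num_cpu > 0 && blocks_per_cpu > 0);
    const long n = intervals;
    const long blocks = static_cast<long>(num_cpu) * blocks_per_cpu;
    MeshSize s;
    s.hexes = blocks * n * n * n;
    s.vertices = (n + 1) * (n + 1) * (blocks * n + 1);
    return s;
}
```

For example, intervals = 2 on 2 CPUs with one block each gives a 4 x 2 x 2 grid: 16 hexes and 5 * 3 * 3 = 45 vertices.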

◆ get_read_options() [1/3]

std::string get_read_options(bool by_rank, const char *extra_opts)

Definition at line 109 of file parallel_hdf5_test.cpp.

References DEFAULT_MODE, and get_read_options().

◆ get_read_options() [2/3]

std::string get_read_options(bool by_rank = DEFAULT_BY_RANK, Mode mode = DEFAULT_MODE, const char *extra_opts = 0)

Definition at line 114 of file parallel_hdf5_test.cpp.

References BCAST_DELETE, HyperslabAppend, MPI_COMM_WORLD, PARTITION_TAG, READ_DELETE, READ_PART, and ReadDebugLevel.

Referenced by get_read_options(), test_read_bc_sets(), test_read_elements_common(), test_read_global_tags(), test_read_sets_common(), test_read_tags(), and test_read_time().

◆ get_read_options() [3/3]

std::string get_read_options(const char *extra_opts)

Definition at line 105 of file parallel_hdf5_test.cpp.

References DEFAULT_BY_RANK, DEFAULT_MODE, and get_read_options().
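Overloads [1/3] and [3/3] both forward to overload [2/3], which, according to its reference list, consults MPI_COMM_WORLD, PARTITION_TAG, ReadDebugLevel and HyperslabAppend to build a MOAB read-option string. The sketch below is a hypothetical reconstruction, not the test's code. The hypothetical global NumProcs stands in for the process count that MPI_Comm_size would report, so the example runs without MPI, and the option spellings (PARALLEL=, PARTITION=, PARTITION_BY_RANK, DEBUG_IO=, HYPERSLAB_APPEND) are assumed to follow MOAB's read options.

```cpp
#include <cassert>
#include <sstream>
#include <string>

enum Mode { READ_PART, READ_DELETE, BCAST_DELETE };

const char PARTITION_TAG[] = "PARTITION";
const Mode DEFAULT_MODE = READ_PART;
const bool DEFAULT_BY_RANK = false;
int ReadDebugLevel = 0;
bool HyperslabAppend = false;

// Hypothetical stand-in for MPI_Comm_size(MPI_COMM_WORLD, ...).
int NumProcs = 1;

// Overload [2/3]: assembles the option string.
std::string get_read_options(bool by_rank = DEFAULT_BY_RANK, Mode mode = DEFAULT_MODE,
                             const char* extra_opts = 0)
{
    std::ostringstream str;
    if (NumProcs > 1)  // request a parallel read only with several processes
    {
        str << "PARALLEL=";
        switch (mode)
        {
            case READ_PART: str << "READ_PART"; break;
            case READ_DELETE: str << "READ_DELETE"; break;
            case BCAST_DELETE: str << "BCAST_DELETE"; break;
        }
        str << ";PARTITION=" << PARTITION_TAG << ";";
        if (by_rank) str << "PARTITION_BY_RANK;";
    }
    if (extra_opts) str << extra_opts << ";";
    if (ReadDebugLevel) str << "DEBUG_IO=" << ReadDebugLevel << ";";
    if (HyperslabAppend) str << "HYPERSLAB_APPEND;";
    return str.str();
}

// Overload [3/3]: default partitioning and mode.
std::string get_read_options(const char* extra_opts)
{
    return get_read_options(DEFAULT_BY_RANK, DEFAULT_MODE, extra_opts);
}

// Overload [1/3]: default mode only.
std::string get_read_options(bool by_rank, const char* extra_opts)
{
    return get_read_options(by_rank, DEFAULT_MODE, extra_opts);
}
```

Routing every overload through one function keeps the option syntax in a single place, so a test only states what differs from the defaults.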

◆ get_tag()

Tag get_tag(Interface &mb, int rank, bool create)

Definition at line 1305 of file parallel_hdf5_test.cpp.

References CHECK_ERR, create(), ErrorCode, mb, MB_TAG_BIT, MB_TAG_DENSE, MB_TAG_EXCL, MB_TAG_SPARSE, MB_TAG_VARLEN, MB_TYPE_BIT, MB_TYPE_DOUBLE, MB_TYPE_HANDLE, MB_TYPE_INTEGER, MB_TYPE_OPAQUE, rank, moab::Core::tag_get_handle(), and TagType.

Referenced by test_write_different_tags().
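get_tag takes the process rank, and its reference list spans dense, sparse and bit storage, variable-length tags, and integer, double, handle, opaque and bit data types, which suggests that test_write_different_tags gives each rank a tag with different properties. The mapping below is one hypothetical way to derive such properties from a rank number. The TagSpec struct, the enums, the "TestTag" naming pattern and the modulo rules are illustrative assumptions, not the test's actual logic.

```cpp
#include <cassert>
#include <string>

enum class SketchStorage { Dense, Sparse, Bit };
enum class SketchData { Opaque, Integer, Double, Bit, Handle };

struct TagSpec
{
    std::string name;
    SketchStorage storage;
    SketchData data;
    int length;    // values per entity (ignored when var_len is true)
    bool var_len;  // variable-length tag
};

// Hypothetical rule: cycle through data types by rank; bit data forces bit
// storage, and other ranks alternate between dense and sparse storage.
TagSpec tag_spec_for_rank(int rank)
{
    assert(rank >= 0);
    TagSpec spec;
    spec.name = "TestTag" + std::to_string(rank);
    spec.data = static_cast<SketchData>(rank % 5);
    if (spec.data == SketchData::Bit)
        spec.storage = SketchStorage::Bit;
    else
        spec.storage = (rank % 2) ? SketchStorage::Dense : SketchStorage::Sparse;
    spec.length = rank % 3 + 1;
    // Variable-length tags are never combined with bit storage here.
    spec.var_len = (spec.storage != SketchStorage::Bit) && (rank % 4 == 1);
    return spec;
}
```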

◆ load_and_partition()

void load_and_partition(Interface &moab, const char *filename, bool print_debug = false)

Definition at line 412 of file parallel_hdf5_test.cpp.

References CHECK_ERR, ErrorCode, filename, and print_partitioned_entities().

Referenced by test_write_elements(), and test_write_shared_sets().

◆ main()

int main(int argc, char *argv[])

Definition at line 149 of file parallel_hdf5_test.cpp.

References CHECK, DefaultReadIntervals, HyperslabAppend, KeepTmpFiles, MPI_COMM_WORLD, PauseOnStart, rank, ReadBlocks, ReadDebugLevel, ReadIntervals, RUN_TEST, test_read_bc_sets(), test_read_elements(), test_read_elements_by_rank(), test_read_global_tags(), test_read_non_adjs_side(), test_read_sets(), test_read_sets_bcast_dups(), test_read_sets_read_dups(), test_read_tags(), test_read_time(), test_var_length_parallel(), test_write_dense_tags(), test_write_different_element_types(), test_write_different_tags(), test_write_elements(), test_write_polygons(), test_write_shared_sets(), test_write_unbalanced(), and WriteDebugLevel.

◆ print_partitioned_entities()

void print_partitioned_entities(Interface &moab, bool list_non_shared = false)

Definition at line 261 of file parallel_hdf5_test.cpp.

References buffer, CHECK_ERR, children, moab::Range::clear(), dim, entities, ErrorCode, geom, GEOM_DIMENSION_TAG_NAME, geom_tag, id_tag, MAX_SHARING_PROCS, MB_TAG_NOT_FOUND, MB_TYPE_HANDLE, MB_TYPE_INTEGER, MB_TYPE_OPAQUE, MBENTITYSET, MPI_COMM_WORLD, PARALLEL_SHARED_HANDLE_TAG_NAME, PARALLEL_SHARED_HANDLES_TAG_NAME, PARALLEL_SHARED_PROC_TAG_NAME, PARALLEL_SHARED_PROCS_TAG_NAME, PARALLEL_STATUS_TAG_NAME, PSTATUS_NOT_OWNED, PSTATUS_SHARED, rank, size, moab::subtract(), and t.

Referenced by load_and_partition().

◆ save_and_load_on_root()

void save_and_load_on_root(Interface &moab, const char *tmp_filename)

Definition at line 427 of file parallel_hdf5_test.cpp.

References CHECK_ERR, ErrorCode, moab::ParallelComm::get_all_pcomm(), KeepTmpFiles, MB_SUCCESS, MPI_COMM_WORLD, and WriteDebugLevel.

Referenced by test_write_different_element_types(), test_write_different_tags(), test_write_elements(), test_write_polygons(), test_write_shared_sets(), and test_write_unbalanced().

◆ test_bcast_summary()

void test_bcast_summary()

Definition at line 51 of file parallel_hdf5_test.cpp.

References ReadIntervals, and test_read_elements_common().

◆ test_read_bc_sets()

void test_read_bc_sets()

Definition at line 1199 of file parallel_hdf5_test.cpp.

References moab::Range::begin(), CHECK_EQUAL, CHECK_ERR, moab::Range::clear(), create_input_file(), DefaultReadIntervals, DIRICHLET_SET_TAG_NAME, ErrorCode, moab::Core::get_entities_by_handle(), moab::Core::get_entities_by_type_and_tag(), get_read_options(), KeepTmpFiles, moab::Core::load_file(), MATERIAL_SET_TAG_NAME, mb, MB_TYPE_INTEGER, MBENTITYSET, MPI_COMM_WORLD, NEUMANN_SET_TAG_NAME, rank, moab::Range::size(), and moab::Core::tag_get_handle().

Referenced by main().

◆ test_read_elements()

void test_read_elements()

Definition at line 43 of file parallel_hdf5_test.cpp.

References ReadIntervals, and test_read_elements_common().

Referenced by main().

◆ test_read_elements_by_rank()

void test_read_elements_by_rank()

Definition at line 47 of file parallel_hdf5_test.cpp.

References ReadIntervals, and test_read_elements_common().

Referenced by main().

◆ test_read_elements_common()

void test_read_elements_common(bool by_rank, int intervals, bool print_time, const char *extra_opts = 0)

Definition at line 929 of file parallel_hdf5_test.cpp.

References CHECK_EQUAL, CHECK_ERR, CHECK_REAL_EQUAL, create_input_file(), ErrorCode, moab::Range::front(), moab::Core::get_coords(), moab::Core::get_entities_by_type(), moab::Core::get_entities_by_type_and_tag(), moab::Core::get_number_entities_by_dimension(), get_read_options(), KeepTmpFiles, moab::Core::load_file(), mb, MB_TYPE_INTEGER, MBENTITYSET, MBVERTEX, MPI_COMM_WORLD, PARTITION_TAG, rank, moab::Range::size(), moab::Core::tag_get_data(), and moab::Core::tag_get_handle().

Referenced by test_bcast_summary(), test_read_elements(), test_read_elements_by_rank(), and test_read_summary().

◆ test_read_global_tags()

void test_read_global_tags()

Definition at line 1103 of file parallel_hdf5_test.cpp.

References CHECK_EQUAL, CHECK_ERR, create_input_file(), ErrorCode, get_read_options(), KeepTmpFiles, moab::Core::load_file(), mb, MB_TAG_DENSE, MB_TYPE_INTEGER, MPI_COMM_WORLD, rank, moab::Core::tag_get_data(), moab::Core::tag_get_default_value(), moab::Core::tag_get_handle(), moab::Core::tag_get_type(), and TagType.

Referenced by main().

◆ test_read_non_adjs_side()

void test_read_non_adjs_side()

Definition at line 1572 of file parallel_hdf5_test.cpp.

References CHECK, CHECK_ERR, ErrorCode, MPI_COMM_WORLD, rank, and size.

Referenced by main().

◆ test_read_sets()

void test_read_sets()

Definition at line 64 of file parallel_hdf5_test.cpp.

References test_read_sets_common().

Referenced by main().

◆ test_read_sets_bcast_dups()

void test_read_sets_bcast_dups()

Definition at line 68 of file parallel_hdf5_test.cpp.

References test_read_sets_common().

Referenced by main().

◆ test_read_sets_common()

void test_read_sets_common(const char *extra_opts = 0)

Definition at line 1141 of file parallel_hdf5_test.cpp.

References moab::Range::all_of_type(), moab::Range::begin(), CHECK, CHECK_EQUAL, CHECK_ERR, CHECK_REAL_EQUAL, create_input_file(), DefaultReadIntervals, moab::Range::end(), ErrorCode, moab::Core::get_coords(), moab::Core::get_entities_by_handle(), moab::Core::get_entities_by_type_and_tag(), get_read_options(), KeepTmpFiles, moab::Core::load_file(), mb, MB_TAG_SPARSE, MB_TYPE_INTEGER, MBENTITYSET, MBVERTEX, MPI_COMM_WORLD, rank, moab::Range::size(), moab::Core::tag_get_data(), moab::Core::tag_get_handle(), moab::Core::tag_get_type(), and TagType.

Referenced by test_read_sets(), test_read_sets_bcast_dups(), and test_read_sets_read_dups().

◆ test_read_sets_read_dups()

void test_read_sets_read_dups()

Definition at line 72 of file parallel_hdf5_test.cpp.

References test_read_sets_common().

Referenced by main().

◆ test_read_summary()

void test_read_summary()

Definition at line 55 of file parallel_hdf5_test.cpp.

References ReadIntervals, and test_read_elements_common().

◆ test_read_tags()

void test_read_tags()

Definition at line 1050 of file parallel_hdf5_test.cpp.

References moab::Range::begin(), CHECK, CHECK_EQUAL, CHECK_ERR, CHECK_REAL_EQUAL, create_input_file(), DefaultReadIntervals, moab::Range::end(), ErrorCode, moab::Core::get_coords(), moab::Core::get_entities_by_type(), moab::Core::get_entities_by_type_and_tag(), get_read_options(), KeepTmpFiles, moab::Core::load_file(), mb, MB_TAG_DENSE, MB_TYPE_INTEGER, MBVERTEX, MPI_COMM_WORLD, rank, moab::Core::tag_get_data(), moab::Core::tag_get_handle(), moab::Core::tag_get_type(), and TagType.

Referenced by main().

◆ test_read_time()

void test_read_time()

Definition at line 983 of file parallel_hdf5_test.cpp.

References BCAST_DELETE, CHECK_ERR, create_input_file(), moab::Core::delete_mesh(), moab::Interface::delete_mesh(), ErrorCode, get_read_options(), KeepTmpFiles, moab::Core::load_file(), moab::Interface::load_file(), mb, MPI_COMM_WORLD, rank, READ_DELETE, READ_PART, ReadBlocks, and ReadIntervals.

Referenced by main().
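test_read_time reads a generated file, and its reference list names all three Mode values together with load_file and delete_mesh, which suggests it times a load-and-discard cycle per read mode. The sketch below shows that timing pattern with std::chrono::steady_clock. The load callable is a hypothetical placeholder for loading (and then deleting) the mesh with a given mode, and time_each_mode is an illustrative name, not part of the test.

```cpp
#include <array>
#include <cassert>
#include <chrono>
#include <functional>

enum Mode { READ_PART, READ_DELETE, BCAST_DELETE };

// Calls `load` once per read mode and returns the wall-clock seconds each
// call took, in the order READ_PART, READ_DELETE, BCAST_DELETE.
std::array<double, 3> time_each_mode(const std::function<void(Mode)>& load)
{
    const Mode modes[3] = { READ_PART, READ_DELETE, BCAST_DELETE };
    std::array<double, 3> seconds{};
    for (int i = 0; i < 3; ++i)
    {
        // steady_clock is monotonic, so intervals are unaffected by clock changes.
        auto start = std::chrono::steady_clock::now();
        load(modes[i]);
        std::chrono::duration<double> elapsed = std::chrono::steady_clock::now() - start;
        seconds[i] = elapsed.count();
    }
    return seconds;
}
```

In an MPI run, each rank would measure its own interval; comparing modes fairly would additionally require synchronizing the ranks before each timed read.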

◆ test_var_length_parallel()

void test_var_length_parallel()

Definition at line 668 of file parallel_hdf5_test.cpp.

References moab::Range::begin(), CHECK, CHECK_EQUAL, CHECK_ERR, moab::Range::clear(), moab::Core::create_vertices(), moab::Range::end(), ErrorCode, filename, moab::Core::get_entities_by_type(), KeepTmpFiles, moab::Core::load_mesh(), mb, MB_SUCCESS, MB_TAG_DENSE, MB_TAG_EXCL, MB_TAG_VARLEN, MB_TYPE_INTEGER, MB_VARIABLE_DATA_LENGTH, MBVERTEX, MPI_COMM_WORLD, PARALLEL_GID_TAG_NAME, rank, size, moab::Core::tag_get_by_ptr(), moab::Core::tag_get_data_type(), moab::Core::tag_get_handle(), moab::Core::tag_get_length(), moab::Core::tag_get_type(), moab::Core::tag_set_by_ptr(), tagname, TagType, moab::Core::write_file(), and WriteDebugLevel.

Referenced by main().

◆ test_write_dense_tags()

void test_write_dense_tags()

Definition at line 1514 of file parallel_hdf5_test.cpp.

References CHECK, CHECK_ERR, ErrorCode, moab::Core::load_file(), MB_TAG_CREAT, MB_TAG_DENSE, MB_TYPE_DOUBLE, MPI_COMM_WORLD, rank, size, moab::Range::size(), moab::Core::tag_get_handle(), moab::Core::tag_get_type(), tagname, and TagType.

Referenced by main().

◆ test_write_different_element_types()

void test_write_different_element_types()

Definition at line 1257 of file parallel_hdf5_test.cpp.

References moab::Range::begin(), CHECK_EQUAL, CHECK_ERR, moab::Core::create_element(), moab::Core::create_vertex(), moab::Range::end(), ErrorCode, moab::Core::get_connectivity(), moab::Core::get_entities_by_type(), mb, MBEDGE, MBHEX, MBPRISM, MBPYRAMID, MBQUAD, MBTET, MBTRI, MPI_COMM_WORLD, rank, and save_and_load_on_root().

Referenced by main().

◆ test_write_different_tags()

void test_write_different_tags()

Definition at line 1348 of file parallel_hdf5_test.cpp.

References get_tag(), mb, MPI_COMM_WORLD, rank, and save_and_load_on_root().

Referenced by main().

◆ test_write_elements()

void test_write_elements()

Definition at line 551 of file parallel_hdf5_test.cpp.

References CHECK, CHECK_ERR, check_identical_mesh(), count_owned_entities(), moab::CN::EntityTypeName(), ErrorCode, load_and_partition(), moab::Core::load_file(), MBENTITYSET, MBVERTEX, MPI_COMM_WORLD, rank, save_and_load_on_root(), and t.

Referenced by main().

◆ test_write_polygons()

void test_write_polygons()

Definition at line 1371 of file parallel_hdf5_test.cpp.

References moab::Range::begin(), CHECK_EQUAL, CHECK_ERR, moab::Core::create_element(), moab::Core::create_vertex(), moab::Range::end(), ErrorCode, moab::Core::get_connectivity(), moab::Core::get_coords(), moab::Core::get_entities_by_type(), mb, MBPOLYGON, MPI_COMM_WORLD, rank, save_and_load_on_root(), and moab::Range::size().

Referenced by main().

◆ test_write_shared_sets()

void test_write_shared_sets()

Definition at line 627 of file parallel_hdf5_test.cpp.

References moab::Range::begin(), CHECK, CHECK_EQUAL, CHECK_ERR, check_sets_sizes(), moab::Range::end(), ErrorCode, moab::Range::front(), moab::Interface::get_entities_by_type_and_tag(), load_and_partition(), moab::Interface::load_file(), MATERIAL_SET_TAG_NAME, MB_TYPE_INTEGER, MBENTITYSET, MPI_COMM_WORLD, rank, save_and_load_on_root(), size, moab::Range::size(), moab::Interface::tag_get_data(), and moab::Interface::tag_get_handle().

Referenced by main().

◆ test_write_unbalanced()

void test_write_unbalanced()

Definition at line 1437 of file parallel_hdf5_test.cpp.

References moab::Core::add_entities(), CHECK_EQUAL, CHECK_ERR, moab::Range::clear(), moab::Core::create_element(), moab::Core::create_meshset(), moab::Core::create_vertex(), entities, ErrorCode, moab::Range::front(), moab::Core::get_entities_by_handle(), moab::Core::get_entities_by_type(), moab::Core::get_entities_by_type_and_tag(), moab::ParallelComm::get_pcomm(), GLOBAL_ID_TAG_NAME, moab::Core::globalId_tag(), moab::Range::insert(), mb, MB_TYPE_INTEGER, MBENTITYSET, MBQUAD, MBVERTEX, MESHSET_SET, MPI_COMM_WORLD, rank, moab::ParallelComm::resolve_shared_ents(), moab::ParallelComm::resolve_shared_sets(), save_and_load_on_root(), moab::Range::size(), moab::Core::tag_get_handle(), and moab::Core::tag_set_data().

Referenced by main().

Variable Documentation

◆ DEFAULT_BY_RANK

const bool DEFAULT_BY_RANK = false

Definition at line 102 of file parallel_hdf5_test.cpp.

Referenced by get_read_options().

◆ DEFAULT_MODE

const Mode DEFAULT_MODE = READ_PART

Definition at line 101 of file parallel_hdf5_test.cpp.

Referenced by get_read_options().

◆ DefaultReadIntervals

const int DefaultReadIntervals = 2

Definition at line 90 of file parallel_hdf5_test.cpp.

Referenced by main(), test_read_bc_sets(), test_read_sets_common(), and test_read_tags().

◆ HyperslabAppend

bool HyperslabAppend = false

Definition at line 89 of file parallel_hdf5_test.cpp.

Referenced by get_read_options(), and main().

◆ KeepTmpFiles

bool KeepTmpFiles = false

Definition at line 87 of file parallel_hdf5_test.cpp.

Referenced by main(), save_and_load_on_root(), test_read_bc_sets(), test_read_elements_common(), test_read_global_tags(), test_read_sets_common(), test_read_tags(), test_read_time(), and test_var_length_parallel().

◆ PARTITION_TAG

const char PARTITION_TAG[] = "PARTITION"

Definition at line 85 of file parallel_hdf5_test.cpp.

Referenced by create_input_file(), get_read_options(), and test_read_elements_common().

◆ PauseOnStart

bool PauseOnStart = false

Definition at line 88 of file parallel_hdf5_test.cpp.

Referenced by main().

◆ ReadBlocks

int ReadBlocks = 1

Definition at line 93 of file parallel_hdf5_test.cpp.

Referenced by main(), and test_read_time().

◆ ReadDebugLevel

int ReadDebugLevel = 0

Definition at line 91 of file parallel_hdf5_test.cpp.

Referenced by get_read_options(), and main().

◆ ReadIntervals

int ReadIntervals = 0

Definition at line 42 of file parallel_hdf5_test.cpp.

Referenced by main(), test_bcast_summary(), test_read_elements(), test_read_elements_by_rank(), test_read_summary(), and test_read_time().

◆ WriteDebugLevel

int WriteDebugLevel = 0

Definition at line 92 of file parallel_hdf5_test.cpp.

Referenced by main(), save_and_load_on_root(), and test_var_length_parallel().