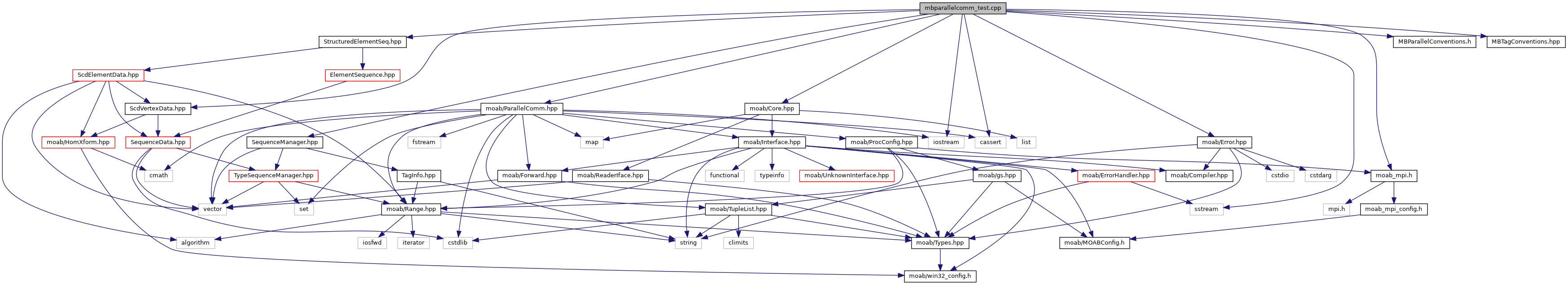

#include "moab/ParallelComm.hpp"

#include "MBParallelConventions.h"

#include "MBTagConventions.hpp"

#include "moab/Core.hpp"

#include "ScdVertexData.hpp"

#include "StructuredElementSeq.hpp"

#include "SequenceManager.hpp"

#include "moab/Error.hpp"

#include "moab_mpi.h"

#include <iostream>

#include <sstream>

#include <cassert>

Include dependency graph for mbparallelcomm_test.cpp:


| Macros | |
|---|---|
| #define | REALTFI 1 |
| #define | ERROR(a, b) |
| #define | PRINT_LAST_ERROR |
| #define | RRA(a) |
| #define | PRINTSETS(a, b, c, p) |

| Functions | |
|---|---|
| ErrorCode | create_linear_mesh (Interface *mbImpl, int N, int M, int &nshared) |
| ErrorCode | create_scd_mesh (Interface *mbImpl, int IJK, int &nshared) |
| ErrorCode | read_file (Interface *mbImpl, std::vector< std::string > &filenames, const char *tag_name, int tag_val, int distrib, int parallel_option, int resolve_shared, int with_ghosts, int use_mpio, bool print_parallel) |
| ErrorCode | test_packing (Interface *mbImpl, const char *filename) |
| ErrorCode | report_nsets (Interface *mbImpl) |
| ErrorCode | report_iface_ents (Interface *mbImpl, std::vector< ParallelComm * > &pcs) |
| void | print_usage (const char *) |
| int | main (int argc, char **argv) |

| Variables | |
|---|---|
| const bool | debug = false |

Macro Definition Documentation

◆ ERROR

| #define ERROR(a, b) |

Definition at line 28 of file mbparallelcomm_test.cpp.
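This page lists only the signature of `ERROR(a, b)`, not its body. A common shape for a two-argument error macro in test drivers is "report a message, then return a status code from the enclosing function". The sketch below illustrates that pattern under that assumption; the macro name `SKETCH_ERROR`, the `SketchErrorCode` enum, and the `check_size` caller are hypothetical stand-ins, and the real definition at line 28 may behave differently.

```cpp
#include <cassert>
#include <iostream>
#include <string>

// Hypothetical stand-in for moab::ErrorCode; not MOAB's actual enum.
enum SketchErrorCode { SKETCH_SUCCESS = 0, SKETCH_FAILURE = 1 };

// Hypothetical "print message, return code" macro. The do/while(0)
// wrapper makes it behave as a single statement after an unbraced if.
#define SKETCH_ERROR(msg, code)              \
    do {                                     \
        std::cerr << (msg) << std::endl;     \
        return (code);                       \
    } while (0)

// Hypothetical caller: rejects negative sizes with an error message.
SketchErrorCode check_size(int n)
{
    if (n < 0) SKETCH_ERROR("negative size: " + std::to_string(n), SKETCH_FAILURE);
    return SKETCH_SUCCESS;
}
```

With this pattern, `check_size(4)` returns `SKETCH_SUCCESS`, while `check_size(-1)` prints a message to `std::cerr` and returns `SKETCH_FAILURE`.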

◆ PRINT_LAST_ERROR

| #define PRINT_LAST_ERROR |

Definition at line 34 of file mbparallelcomm_test.cpp.

◆ PRINTSETS

| #define PRINTSETS(a, b, c, p) |

◆ REALTFI

| #define REALTFI 1 |

Test of ParallelComm functionality.

To run:

mpirun -np <#procs> mbparallelcomm_test

where <#procs> is the number of MPI processes to launch.

Definition at line 22 of file mbparallelcomm_test.cpp.

◆ RRA

| #define RRA(a) |

Definition at line 43 of file mbparallelcomm_test.cpp.
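`RRA(a)` takes a single argument and is referenced by `read_file()` and `test_packing()`, both of which also reference `MB_SUCCESS`. One plausible reading, which is an assumption and not confirmed by this page, is a "check the local result, record context, return early" macro. The sketch below shows that pattern with hypothetical names (`SKETCH_RRA`, `SketchResult`, `last_error`, `step`, `run`); it does not reproduce the definition at line 43.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-ins; not MOAB's types.
enum SketchResult { SKETCH_OK = 0, SKETCH_FAIL = 1 };
static std::string last_error;  // hypothetical "last error" message buffer

// Hypothetical check-and-return macro. It expects a local variable named
// `result`; on failure it appends the context message and returns it.
#define SKETCH_RRA(msg)                                   \
    do {                                                  \
        if (result != SKETCH_OK) {                        \
            if (!last_error.empty()) last_error += "\n";  \
            last_error += (msg);                          \
            return result;                                \
        }                                                 \
    } while (0)

// Hypothetical operation that succeeds or fails on demand.
SketchResult step(bool ok) { return ok ? SKETCH_OK : SKETCH_FAIL; }

// Runs two steps; the second fails when fail_second is true.
SketchResult run(bool fail_second)
{
    SketchResult result = step(true);
    SKETCH_RRA("first step failed");
    result = step(!fail_second);
    SKETCH_RRA("second step failed");
    return SKETCH_OK;
}
```

The early return keeps each call site to one line, at the cost of requiring a variable with a fixed name (`result`) in every function that uses the macro.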

Function Documentation

◆ create_linear_mesh()

| ErrorCode create_linear_mesh (Interface *mbImpl, int N, int M, int &nshared) |

◆ create_scd_mesh()

| ErrorCode create_scd_mesh (Interface *mbImpl, int IJK, int &nshared) |

◆ main()

| int main (int argc, char **argv) |

Definition at line 73 of file mbparallelcomm_test.cpp.

References moab::Interface::delete_mesh(), ErrorCode, MB_SUCCESS, MPI_COMM_WORLD, PRINT_LAST_ERROR, print_usage(), rank, read_file(), and test_packing().

◆ print_usage()

| void print_usage (const char *command) |

Definition at line 222 of file mbparallelcomm_test.cpp.

Referenced by main().

◆ read_file()

| ErrorCode read_file (Interface *mbImpl, std::vector< std::string > &filenames, const char *tag_name, int tag_val, int distrib, int parallel_option, int resolve_shared, int with_ghosts, int use_mpio, bool print_parallel) |

Definition at line 331 of file mbparallelcomm_test.cpp.

References ErrorCode, moab::ParallelComm::get_pcomm(), moab::Interface::load_file(), MB_SUCCESS, MPI_COMM_WORLD, PRINT_LAST_ERROR, report_iface_ents(), and RRA.

Referenced by main().
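`read_file()` references `moab::Interface::load_file()`, whose parallel behavior in MOAB is controlled through a semicolon-separated option string. The sketch below shows one way flags like those in `read_file()`'s parameter list could be assembled into such a string. The option keywords (`PARALLEL=`, `PARTITION=`, `PARTITION_VAL=`, `PARTITION_DISTRIBUTE`, `PARALLEL_RESOLVE_SHARED_ENTS`, `PARALLEL_GHOSTS=`) follow MOAB's parallel-read options as we understand them and should be checked against the MOAB documentation. The helper `build_read_options` and its parameter meanings are hypothetical; how the real function maps `parallel_option` and `use_mpio` is not shown on this page, so `use_mpio` is omitted here.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Hypothetical helper: assembles a MOAB-style, semicolon-separated read
// option string. Keywords are assumed from MOAB's parallel-read options;
// verify them against the MOAB documentation before relying on them.
std::string build_read_options(const std::string& mode,        // e.g. "READ_PART"
                               const std::string& tag_name,    // partition tag name
                               int tag_val,                    // < 0 means "no PARTITION_VAL"
                               bool distrib,                   // add PARTITION_DISTRIBUTE
                               bool resolve_shared,            // add PARALLEL_RESOLVE_SHARED_ENTS
                               const std::string& ghost_spec)  // empty means "no ghosts"
{
    std::ostringstream opts;
    opts << "PARALLEL=" << mode << ";PARTITION=" << tag_name;
    if (tag_val >= 0) opts << ";PARTITION_VAL=" << tag_val;
    if (distrib) opts << ";PARTITION_DISTRIBUTE";
    if (resolve_shared) opts << ";PARALLEL_RESOLVE_SHARED_ENTS";
    if (!ghost_spec.empty()) opts << ";PARALLEL_GHOSTS=" << ghost_spec;
    return opts.str();
}
```

The resulting string would be passed as the `options` argument of `load_file()`. Building it from independent flags keeps each command-line switch mapped to exactly one option keyword.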

◆ report_iface_ents()

| ErrorCode report_iface_ents (Interface *mbImpl, std::vector< ParallelComm * > &pcs) |

Definition at line 461 of file mbparallelcomm_test.cpp.

References ErrorCode, moab::Interface::get_adjacencies(), moab::Interface::get_entities_by_dimension(), moab::Interface::get_last_error(), moab::Interface::get_number_entities_by_dimension(), MB_SUCCESS, moab::Range::merge(), MPI_COMM_WORLD, PSTATUS_NOT_OWNED, rank, size, moab::Range::size(), and moab::Interface::UNION.

Referenced by read_file().
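`report_iface_ents()` references the `PSTATUS_NOT_OWNED` flag from `MBParallelConventions.h`. Parallel status values in MOAB are bit masks, so an entity owned by the local rank is one whose status does not have that bit set. The sketch below counts owned entries in a plain vector of status bytes; the constant value `0x1` is an assumption to check against `MBParallelConventions.h`, and `count_owned` is a hypothetical helper. The real function works on MOAB `Range`s and tags rather than a vector.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumed flag value for illustration; check MBParallelConventions.h.
constexpr unsigned char SKETCH_PSTATUS_NOT_OWNED = 0x1;

// Counts entities owned by this rank: those whose status byte does not
// have the "not owned" bit set. Any other bits (e.g. shared) are ignored.
std::size_t count_owned(const std::vector<unsigned char>& pstatus)
{
    std::size_t owned = 0;
    for (unsigned char s : pstatus)
        if (!(s & SKETCH_PSTATUS_NOT_OWNED)) ++owned;
    return owned;
}
```

Masking a single bit, rather than comparing the whole byte, keeps the ownership test correct when other status bits are also set on the same entity.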

◆ report_nsets()

| ErrorCode report_nsets (Interface *mbImpl) |

Definition at line 238 of file mbparallelcomm_test.cpp.

References moab::Range::clear(), moab::debug, ErrorCode, moab::Interface::get_entities_by_type(), moab::Interface::get_number_entities_by_type(), moab::Interface::list_entities(), MB_SUCCESS, MB_TYPE_INTEGER, MBENTITYSET, MPI_COMM_WORLD, moab::Range::print(), PRINTSETS, rank, moab::Range::size(), and moab::Interface::tag_get_handle().

◆ test_packing()

| ErrorCode test_packing (Interface *mbImpl, const char *filename) |

Definition at line 420 of file mbparallelcomm_test.cpp.

References moab::ParallelComm::Buffer::buff_ptr, moab::Interface::create_meshset(), ErrorCode, filename, moab::Interface::get_entities_by_handle(), moab::Range::insert(), moab::Interface::load_file(), MB_SUCCESS, MESHSET_SET, MPI_COMM_WORLD, moab::ParallelComm::pack_buffer(), PRINT_LAST_ERROR, moab::ParallelComm::Buffer::reset_ptr(), RRA, and moab::ParallelComm::unpack_buffer().

Referenced by main().
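`test_packing()` references `ParallelComm::pack_buffer()`, `ParallelComm::unpack_buffer()`, and the `Buffer` members `buff_ptr` and `reset_ptr()`, which suggests a pack, rewind, unpack round trip. The sketch below illustrates only that cursor pattern with a hypothetical `SketchBuffer` of integers and a hypothetical `round_trip` helper; it does not use or mirror MOAB's actual `Buffer` class or its packing format.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical byte buffer with a read/write cursor, loosely modeled on the
// buff_ptr / reset_ptr() idea; this is not MOAB's ParallelComm::Buffer.
struct SketchBuffer {
    std::vector<unsigned char> mem;
    std::size_t pos = 0;  // cursor offset (plays the role of buff_ptr)

    void reset_ptr() { pos = 0; }  // rewind the cursor to the start

    void pack_int(int v) {
        if (mem.size() < pos + sizeof v) mem.resize(pos + sizeof v);
        std::memcpy(mem.data() + pos, &v, sizeof v);
        pos += sizeof v;
    }
    int unpack_int() {
        int v;
        std::memcpy(&v, mem.data() + pos, sizeof v);
        pos += sizeof v;
        return v;
    }
};

// Packs a count followed by the values, rewinds, then unpacks them again.
std::vector<int> round_trip(const std::vector<int>& in)
{
    SketchBuffer buf;
    buf.pack_int(static_cast<int>(in.size()));
    for (int v : in) buf.pack_int(v);
    buf.reset_ptr();
    std::vector<int> out(static_cast<std::size_t>(buf.unpack_int()));
    for (int& v : out) v = buf.unpack_int();
    return out;
}
```

Packing the element count first lets the unpacking side size its output before reading, and comparing the unpacked data with the input is the natural correctness check for such a round trip.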

Variable Documentation

◆ debug

| const bool debug = false |

Definition at line 24 of file mbparallelcomm_test.cpp.