std::string readopts( "PARALLEL=READ_PART;PARTITION=PARALLEL_PARTITION;PARALLEL_RESOLVE_SHARED_ENTS" );
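The `readopts` string is a semicolon-separated list of `KEY=VALUE` settings plus bare flags such as `PARALLEL_RESOLVE_SHARED_ENTS`. The following minimal sketch shows how such a string decomposes. It uses a hypothetical helper, `split_read_options`. MOAB parses these options itself, so this only illustrates the format:

```cpp
#include <cassert>
#include <map>
#include <sstream>
#include <string>

// Hypothetical helper (illustration only): split a MOAB-style read options string,
// e.g. "PARALLEL=READ_PART;PARTITION=PARALLEL_PARTITION;PARALLEL_RESOLVE_SHARED_ENTS",
// into key/value pairs. Bare flags without '=' map to an empty value.
std::map< std::string, std::string > split_read_options( const std::string& opts )
{
    std::map< std::string, std::string > result;
    std::istringstream stream( opts );
    std::string token;
    while( std::getline( stream, token, ';' ) )  // options are separated by ';'
    {
        if( token.empty() ) continue;  // tolerate stray separators
        const std::string::size_type eq = token.find( '=' );
        if( eq == std::string::npos )
            result[token] = "";  // flag, e.g. PARALLEL_RESOLVE_SHARED_ENTS
        else
            result[token.substr( 0, eq )] = token.substr( eq + 1 );
    }
    return result;
}
```

For the string above, this yields `PARALLEL` → `READ_PART`, `PARTITION` → `PARALLEL_PARTITION`, and the flag `PARALLEL_RESOLVE_SHARED_ENTS` with an empty value.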

MPI_Init( &argc, &argv );

std::string atmFilename = TestDir + "unittest/srcWithSolnTag.h5m";
std::string ocnFilename = TestDir + "unittest/outTri15_8.h5m";
std::string mapFilename = TestDir + "unittest/mapNE20_FV15.nc";
std::string baseline = TestDir + "unittest/baseline2.txt";
int rankInOcnComm = -1;
int cmpocn = 17, cplocn = 18,
int rankInCouComm = -1;

opts.addOpt< std::string >( "atmosphere,t", "atm mesh filename (source)", &atmFilename );
opts.addOpt< std::string >( "ocean,m", "ocean mesh filename (target)", &ocnFilename );
opts.addOpt< std::string >( "map_file,w", "map file from source to target", &mapFilename );
opts.addOpt< int >( "startAtm,a", "start task for atmosphere layout", &startG1 );
opts.addOpt< int >( "endAtm,b", "end task for atmosphere layout", &endG1 );
opts.addOpt< int >( "startOcn,c", "start task for ocean layout", &startG2 );
opts.addOpt< int >( "endOcn,d", "end task for ocean layout", &endG2 );
opts.addOpt< int >( "startCoupler,g", "start task for coupler layout", &startG4 );
opts.addOpt< int >( "endCoupler,j", "end task for coupler layout", &endG4 );

int types[2] = { 3, 3 };
int disc_orders[2] = { 1, 1 };
opts.addOpt< int >( "typeSource,x", "source type", &types[0] );
opts.addOpt< int >( "typeTarget,y", "target type", &types[1] );
opts.addOpt< int >( "orderSource,u", "source order", &disc_orders[0] );
opts.addOpt< int >( "orderTarget,v", "target order", &disc_orders[1] );
bool analytic_field = false;
opts.addOpt< void >( "analytic,q", "analytic field", &analytic_field );
bool no_regression_test = false;
opts.addOpt< void >( "no_regression,r", "do not do regression test against baseline 1", &no_regression_test );
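Each `addOpt` name string pairs a long option name with a one-letter short form, separated by a comma (`"atmosphere,t"`, `"no_regression,r"`). The hypothetical helper below only illustrates that naming convention. It is not how `ProgOptions` is implemented:

```cpp
#include <cassert>
#include <string>
#include <utility>

// Hypothetical helper (illustration only): split an option spec such as
// "atmosphere,t" into its long name ("atmosphere") and short flag ("t").
// A spec without a comma is treated as a long name with no short form.
std::pair< std::string, std::string > split_option_name( const std::string& spec )
{
    const std::string::size_type comma = spec.find( ',' );
    if( comma == std::string::npos ) return { spec, "" };
    return { spec.substr( 0, comma ), spec.substr( comma + 1 ) };
}
```

The `addOpt< void >` entries (`analytic,q`, `no_regression,r`) appear to be switches that take no value and set the bound `bool` when present.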

<< "\n ocn file: " << ocnFilename << "\n on tasks : " << startG2 << ":" << endG2
<< "\n map file:" << mapFilename << "\n on tasks : " << startG4 << ":" << endG4 << "\n";
if( !no_regression_test )
std::cout << " check projection against baseline: " << baseline << "\n";

MPI_Group atmPEGroup;
CHECKIERR( ierr, "Cannot create atm MPI group and communicator " )
MPI_Group ocnPEGroup;
MPI_Group couPEGroup;
MPI_Group joinAtmCouGroup;
MPI_Group joinOcnCouGroup;
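The six start/end options assign each component an inclusive range of ranks in `MPI_COMM_WORLD`. The joint groups (`joinAtmCouGroup`, `joinOcnCouGroup`) combine a component's ranks with the coupler's. The sketch below models that membership without MPI. It assumes the ranges are contiguous, as the option names suggest. `TaskRange` and `joint_ranks` are hypothetical. In the real code, the union would come from MPI calls such as `MPI_Group_union`. That call yields the same set of ranks but may order them differently.

```cpp
#include <cassert>
#include <set>

// Hypothetical model of one component's layout: the inclusive rank range
// [start, end] (e.g. startG1/endG1 for atm, startG4/endG4 for the coupler).
struct TaskRange
{
    int start;
    int end;
    bool contains( int rank ) const { return rank >= start && rank <= end; }
};

// Ranks of a joint component+coupler group: the union of both ranges,
// returned as a sorted set so overlapping ranks appear only once.
std::set< int > joint_ranks( const TaskRange& comp, const TaskRange& cpl )
{
    assert( comp.start <= comp.end && cpl.start <= cpl.end );
    std::set< int > ranks;
    for( int r = comp.start; r <= comp.end; ++r ) ranks.insert( r );
    for( int r = cpl.start; r <= cpl.end; ++r ) ranks.insert( r );
    return ranks;
}
```

Building a communicator from a group with `MPI_Comm_create` gives `MPI_COMM_NULL` to ranks outside that group. This is presumably why every phase of the test is guarded by a `!= MPI_COMM_NULL` check.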

int cmpAtmAppID = -1;
int cplAtmAppID = -1;
int cmpOcnAppID = -1;
int cplOcnAppID = -1, cplAtmOcnAppID = -1;
if( couComm != MPI_COMM_NULL )
MPI_Comm_rank( couComm, &rankInCouComm );
if( atmComm != MPI_COMM_NULL )
if( ocnComm != MPI_COMM_NULL )
MPI_Comm_rank( ocnComm, &rankInOcnComm );
if( couComm != MPI_COMM_NULL )
CHECKIERR( ierr, "Cannot register ocn_atm map instance over coupler pes " )
const std::string intx_from_file_identifier = "map-from-file";
if( couComm != MPI_COMM_NULL )
int dummy_rowcol = -1;
ierr = iMOAB_LoadMappingWeightsFromFile( cplAtmOcnPID, &dummyCpl, &dummy_rowcol, &dummyType,
                                         intx_from_file_identifier.c_str(), mapFilename.c_str() );

if( atmCouComm != MPI_COMM_NULL )
ierr = iMOAB_MigrateMapMesh( cmpAtmPID, cplAtmOcnPID, cplAtmPID, &atmCouComm, &atmPEGroup, &couPEGroup, &type,
                             &cmpatm, &cplocn, &direction );
CHECKIERR( ierr, "failed to migrate mesh for atm on coupler" );
if( *cplAtmPID >= 0 )
char prefix[] = "atmcov";

if( ocnCouComm != MPI_COMM_NULL )
ierr = iMOAB_MigrateMapMesh( cmpOcnPID, cplAtmOcnPID, cplOcnPID, &ocnCouComm, &ocnPEGroup, &couPEGroup, &type,
                             &cmpocn, &cplocn, &direction );
CHECKIERR( ierr, "failed to migrate mesh for ocn on coupler" );
if( *cplOcnPID >= 0 )
char prefix[] = "ocntgt";
char outputFileRec[] = "CoupOcn.h5m";
int atmCompNDoFs = disc_orders[0] * disc_orders[0], ocnCompNDoFs = disc_orders[1] * disc_orders[1];

const char* bottomTempField = "AnalyticalSolnSrcExact";
const char* bottomTempProjectedField = "Target_proj";
if( couComm != MPI_COMM_NULL )
CHECKIERR( ierr, "failed to define the field tag AnalyticalSolnSrcExact" );
CHECKIERR( ierr, "failed to define the field tag Target_proj" );
if( analytic_field && ( atmComm != MPI_COMM_NULL ) )
CHECKIERR( ierr, "failed to define the field tag AnalyticalSolnSrcExact" );
int nverts[3], nelem[3], nblocks[3], nsbc[3], ndbc[3];
int numAllElem = nelem[2];
numAllElem = nverts[2];
std::vector< double > vals;
int storLeng = atmCompNDoFs * numAllElem;
vals.resize( storLeng );
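The buffer size just computed is the number of DoFs per entity (`disc_orders[0] * disc_orders[0]`) times the number of entities carrying the field. The sketch below reproduces that arithmetic under two assumptions that this excerpt does not show. First, index `[2]` of the `nverts`/`nelem` arrays holds the total count (owned plus ghosted) reported by `iMOAB_GetMeshInfo`. Second, the switch to the vertex count happens for type 2 (vertex-based DoFs). `tag_storage_length` is a hypothetical name.

```cpp
#include <cassert>

// Hypothetical helper: size of the tag buffer for a field of the given
// discretization type and order. Order p carries p*p DoFs per entity; the
// entity count is the total element count, or the total vertex count when
// the field lives on vertices (assumed here to be type 2).
int tag_storage_length( int type, int order, const int nverts[3], const int nelem[3] )
{
    assert( order >= 1 );
    const int ndofsPerEntity = order * order;
    const int numEntities = ( type == 2 ) ? nverts[2] : nelem[2];
    return ndofsPerEntity * numEntities;
}
```

With the defaults (`types` set to 3, order 1), this reduces to one value per element.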

for( int k = 0; k < storLeng; k++ )
if( cplAtmAppID >= 0 )
int nverts[3], nelem[3], nblocks[3], nsbc[3], ndbc[3];
int numAllElem = nelem[2];
numAllElem = nverts[2];
std::vector< double > vals;
int storLeng = atmCompNDoFs * numAllElem;
vals.resize( storLeng );
for( int k = 0; k < storLeng; k++ )
const char* concat_fieldname = "AnalyticalSolnSrcExact";
const char* concat_fieldnameT = "Target_proj";

PUSH_TIMER( "Send/receive data from atm component to coupler in ocn context" )
if( atmComm != MPI_COMM_NULL )
ierr = iMOAB_SendElementTag( cmpAtmPID, "AnalyticalSolnSrcExact", &atmCouComm, &cplocn );
if( couComm != MPI_COMM_NULL )
ierr = iMOAB_ReceiveElementTag( cplAtmPID, "AnalyticalSolnSrcExact", &atmCouComm, &cmpatm );
if( atmComm != MPI_COMM_NULL )
ierr = iMOAB_FreeSenderBuffers( cmpAtmPID, &cplocn );
CHECKIERR( ierr, "cannot free buffers used to resend atm tag towards the coverage mesh" )
if( *cplAtmPID >= 0 )
char prefix[] = "atmcov_withdata";
CHECKIERR( ierr, "failed to write local atm cov mesh with data" );

if( couComm != MPI_COMM_NULL )
PUSH_TIMER( "Apply Scalar projection weights" )
ierr = iMOAB_ApplyScalarProjectionWeights( cplAtmOcnPID, intx_from_file_identifier.c_str(),
                                           concat_fieldname, concat_fieldnameT );
CHECKIERR( ierr, "failed to compute projection weight application" );
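Conceptually, applying the weights loaded from `mapFilename` is a sparse matrix-vector product, target = S · source. Offline map files in the SCRIP/ESMF convention store S in coordinate form as the variables `row`, `col`, and `S`, with 1-based indices. The serial sketch below shows only that product. `apply_weights` is hypothetical, and this is not iMOAB's parallel implementation:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical serial sketch: tgt = S * src, where the sparse matrix S is
// given in coordinate form (row[k], col[k], S[k]) with 1-based indices,
// as in SCRIP/ESMF-style offline map files.
std::vector< double > apply_weights( const std::vector< int >& row, const std::vector< int >& col,
                                     const std::vector< double >& S, const std::vector< double >& src,
                                     std::size_t nTarget )
{
    assert( row.size() == col.size() && col.size() == S.size() );
    std::vector< double > tgt( nTarget, 0.0 );
    for( std::size_t k = 0; k < S.size(); ++k )
    {
        const std::size_t i = static_cast< std::size_t >( row[k] - 1 );  // 1-based -> 0-based
        const std::size_t j = static_cast< std::size_t >( col[k] - 1 );
        assert( i < nTarget && j < src.size() );
        tgt[i] += S[k] * src[j];  // accumulate contribution of source cell j
    }
    return tgt;
}
```

When every row of S sums to 1, as consistent remapping weights should, a constant source field is reproduced on the target up to rounding.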

char outputFileTgt[] = "fOcnOnCpl6.h5m";
if( ocnComm != MPI_COMM_NULL )
CHECKIERR( ierr, "failed to define the field tag for receiving back the tag "
                 "Target_proj on ocn pes" );
if( couComm != MPI_COMM_NULL )
ierr = iMOAB_SendElementTag( cplOcnPID, "Target_proj", &ocnCouComm, &context_id );
CHECKIERR( ierr, "cannot send tag values back to ocean pes" )
if( ocnComm != MPI_COMM_NULL )
ierr = iMOAB_ReceiveElementTag( cmpOcnPID, "Target_proj", &ocnCouComm, &context_id );
CHECKIERR( ierr, "cannot receive tag values from ocean mesh on coupler pes" )
if( couComm != MPI_COMM_NULL )
ierr = iMOAB_FreeSenderBuffers( cplOcnPID, &context_id );
if( ocnComm != MPI_COMM_NULL )
char outputFileOcn[] = "OcnWithProj.h5m";

if( !no_regression_test )
int nverts[3], nelem[3];
std::vector< int > gidElems;
gidElems.resize( nelem[2] );
std::vector< double > tempElems;
tempElems.resize( nelem[2] );
const std::string GidStr = "GLOBAL_ID";
check_baseline_file( baseline, gidElems, tempElems, 1.e-9, err_code );
std::cout << " passed baseline test atm2ocn on ocean task " << rankInOcnComm << "\n";
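The regression check compares each projected value on an ocean task against a stored baseline, using an absolute tolerance of `1.e-9`. This excerpt does not show the baseline file format or the internals of `check_baseline_file`. The sketch below therefore assumes the baseline has already been read into a map from `GLOBAL_ID` to value. `count_baseline_mismatches` is hypothetical:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical sketch of a baseline comparison: look up each local element's
// value by its global ID and count entries that are missing from the baseline
// or differ from it by more than the absolute tolerance eps.
int count_baseline_mismatches( const std::map< int, double >& baseline, const std::vector< int >& gids,
                               const std::vector< double >& vals, double eps )
{
    assert( gids.size() == vals.size() );
    int bad = 0;
    for( std::size_t i = 0; i < gids.size(); ++i )
    {
        const auto it = baseline.find( gids[i] );
        if( it == baseline.end() || std::fabs( it->second - vals[i] ) > eps ) ++bad;
    }
    return bad;
}
```

Matching by global ID rather than by local position matters because the order of elements on each ocean task depends on how the mesh was partitioned.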

if( couComm != MPI_COMM_NULL )
if( ocnComm != MPI_COMM_NULL )
if( atmComm != MPI_COMM_NULL )
if( couComm != MPI_COMM_NULL )
if( couComm != MPI_COMM_NULL )
if( MPI_COMM_NULL != atmCouComm ) MPI_Comm_free( &atmCouComm );
MPI_Group_free( &joinAtmCouGroup );
if( MPI_COMM_NULL != atmComm ) MPI_Comm_free( &atmComm );
if( MPI_COMM_NULL != ocnComm ) MPI_Comm_free( &ocnComm );
if( MPI_COMM_NULL != ocnCouComm ) MPI_Comm_free( &ocnCouComm );
MPI_Group_free( &joinOcnCouGroup );
if( MPI_COMM_NULL != couComm ) MPI_Comm_free( &couComm );
MPI_Group_free( &atmPEGroup );
MPI_Group_free( &ocnPEGroup );
MPI_Group_free( &couPEGroup );
MPI_Group_free( &jgroup );

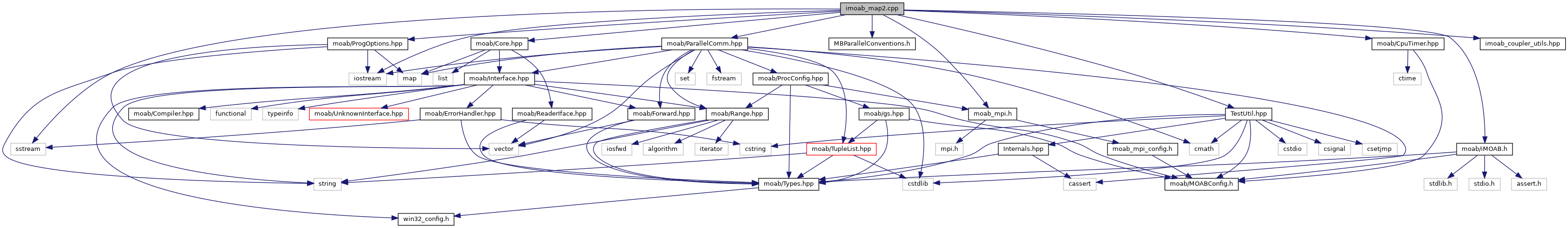

Include dependency graph for imoab_map2.cpp:
