53 std::string

readopts(

"PARALLEL=READ_PART;PARTITION=PARALLEL_PARTITION;PARALLEL_RESOLVE_SHARED_ENTS" );

54 std::string readoptsLnd(

"PARALLEL=READ_PART;PARTITION=PARALLEL_PARTITION" );

61 int repartitioner_scheme = 0;

62 #ifdef MOAB_HAVE_ZOLTAN

63 repartitioner_scheme = 2;

66 MPI_Init( &argc, &argv );

72 std::string

atmFilename = TestDir +

"unittest/wholeATM_T.h5m";

87 #ifdef ENABLE_ATMOCN_COUPLING

88 std::string ocnFilename = TestDir +

"unittest/recMeshOcn.h5m";

89 std::string baseline = TestDir +

"unittest/baseline1.txt";

90 int rankInOcnComm = -1;

91 int cmpocn = 17, cplocn = 18,

95 #ifdef ENABLE_ATMCPLOCN_COUPLING

100 int rankInCouComm = -1;

113 opts.

addOpt< std::string >(

"atmosphere,t",

"atm mesh filename (source)", &

atmFilename );

114 #ifdef ENABLE_ATMOCN_COUPLING

115 opts.

addOpt< std::string >(

"ocean,m",

"ocean mesh filename (target)", &ocnFilename );

117 opts.

addOpt<

int >(

"startAtm,a",

"start task for atmosphere layout", &

startG1 );

118 opts.

addOpt<

int >(

"endAtm,b",

"end task for atmosphere layout", &

endG1 );

119 #ifdef ENABLE_ATMOCN_COUPLING

120 opts.

addOpt<

int >(

"startOcn,c",

"start task for ocean layout", &

startG2 );

121 opts.

addOpt<

int >(

"endOcn,d",

"end task for ocean layout", &

endG2 );

124 opts.

addOpt<

int >(

"startCoupler,g",

"start task for coupler layout", &startG4 );

125 opts.

addOpt<

int >(

"endCoupler,j",

"end task for coupler layout", &endG4 );

127 opts.

addOpt<

int >(

"partitioning,p",

"partitioning option for migration", &repartitioner_scheme );

130 opts.

addOpt<

int >(

"iterations,n",

"number of iterations for coupler", &n );

132 bool no_regression_test =

false;

133 opts.

addOpt<

void >(

"no_regression,r",

"do not do regression test against baseline 1", &no_regression_test );

141 #ifdef ENABLE_ATMOCN_COUPLING

142 "\n ocn file: " << ocnFilename <<

"\n on tasks : " <<

startG2 <<

":" <<

endG2 <<

144 "\n partitioning (0 trivial, 1 graph, 2 geometry) " << repartitioner_scheme <<

"\n ";

152 MPI_Group atmPEGroup;

155 CHECKIERR(

ierr,

"Cannot create atm MPI group and communicator " )

157 #ifdef ENABLE_ATMOCN_COUPLING

158 MPI_Group ocnPEGroup;

161 CHECKIERR(

ierr,

"Cannot create ocn MPI group and communicator " )

165 MPI_Group couPEGroup;

168 CHECKIERR(

ierr,

"Cannot create cpl MPI group and communicator " )

171 MPI_Group joinAtmCouGroup;

176 #ifdef ENABLE_ATMOCN_COUPLING

178 MPI_Group joinOcnCouGroup;

181 CHECKIERR(

ierr,

"Cannot create joint ocn cou communicator" )

187 int cmpAtmAppID = -1;

189 int cplAtmAppID = -1;

191 #ifdef ENABLE_ATMOCN_COUPLING

192 int cmpOcnAppID = -1;

194 int cplOcnAppID = -1, cplAtmOcnAppID = -1;

199 #ifdef ENABLE_ATMCPLOCN_COUPLING

200 int cplAtm2AppID = -1;

202 int cplAtm2OcnAppID = -1;

206 if( couComm != MPI_COMM_NULL )

208 MPI_Comm_rank( couComm, &rankInCouComm );

213 #ifdef ENABLE_ATMOCN_COUPLING

218 #ifdef ENABLE_ATMCPLOCN_COUPLING

221 CHECKIERR(

ierr,

"Cannot register second ATM over coupler PEs" )

225 if( atmComm != MPI_COMM_NULL )

232 #ifdef ENABLE_ATMOCN_COUPLING

233 if( ocnComm != MPI_COMM_NULL )

235 MPI_Comm_rank( ocnComm, &rankInOcnComm );

247 #ifdef ENABLE_ATMOCN_COUPLING

251 &couPEGroup, &ocnCouComm, ocnFilename,

readopts,

nghlay, repartitioner_scheme );

256 #ifdef ENABLE_ATMCPLOCN_COUPLING

258 if( atmComm != MPI_COMM_NULL )

261 ierr = iMOAB_SendMesh( cmpAtmPID, &atmCouComm, &couPEGroup, &cplatm2,

262 &repartitioner_scheme );

266 if( couComm != MPI_COMM_NULL )

268 ierr = iMOAB_ReceiveMesh( cplAtm2PID, &atmCouComm, &atmPEGroup,

274 if( atmComm != MPI_COMM_NULL )

276 int context_id = cplatm;

277 ierr = iMOAB_FreeSenderBuffers( cmpAtmPID, &context_id );

278 CHECKIERR(

ierr,

"cannot free buffers used to send atm mesh" )

281 if( couComm != MPI_COMM_NULL && 1 == n )

283 char outputFileLnd[] =

"recvAtm2.h5m";

285 CHECKIERR(

ierr,

"cannot write second atm mesh after receiving" )

292 #ifdef ENABLE_ATMOCN_COUPLING

293 if( couComm != MPI_COMM_NULL )

297 CHECKIERR(

ierr,

"Cannot register atm/ocn intersection over coupler pes " )

300 #ifdef ENABLE_ATMCPLOCN_COUPLING

301 if( couComm != MPI_COMM_NULL )

305 CHECKIERR(

ierr,

"Cannot register atm2/ocn intersection over coupler pes " )

309 int disc_orders[3] = { 4, 1, 1 };

310 const std::string weights_identifiers[2] = {

"scalar",

"scalar-pc" };

311 const std::string disc_methods[3] = {

"cgll",

"fv",

"pcloud" };

312 const std::string dof_tag_names[3] = {

"GLOBAL_DOFS",

"GLOBAL_ID",

"GLOBAL_ID" };

313 #ifdef ENABLE_ATMOCN_COUPLING

314 if( couComm != MPI_COMM_NULL )

316 PUSH_TIMER(

"Compute ATM-OCN mesh intersection" )

317 ierr = iMOAB_ComputeMeshIntersectionOnSphere( cplAtmPID, cplOcnPID, cplAtmOcnPID );

324 char prefix[] =

"intx_atmocn";

330 if( atmCouComm != MPI_COMM_NULL )

339 PUSH_TIMER(

"Compute OCN coverage graph for ATM mesh" )

340 ierr = iMOAB_CoverageGraph( &atmCouComm, cmpAtmPID, cplAtmPID, cplAtmOcnPID, &

cmpatm, &cplatm,

342 CHECKIERR(

ierr, "cannot recompute direct coverage graph for ocean" )

347 #ifdef ENABLE_ATMCPLOCN_COUPLING

348 if( couComm != MPI_COMM_NULL )

350 PUSH_TIMER(

"Compute ATM-OCN mesh intersection" )

351 ierr = iMOAB_ComputeMeshIntersectionOnSphere( cplAtm2PID, cplOcnPID, cplAtm2OcnPID );

355 CHECKIERR(

ierr, "cannot compute intersection for atm2/ocn" )

374 ierr = iMOAB_ComputeCommGraph( cplAtm2PID, cplAtm2OcnPID, &couComm, &couPEGroup, &couPEGroup, &type1, &type2,

375 &cplatm2, &atm2ocnid );

376 CHECKIERR(

ierr, "cannot recompute direct coverage graph for ocean from atm2" )

383 int fMonotoneTypeID = 0, fVolumetric = 0, fValidate = 0, fNoConserve = 0, fNoBubble = 1, fInverseDistanceMap = 0;

385 #ifdef ENABLE_ATMOCN_COUPLING

387 if( couComm != MPI_COMM_NULL && 1 == n )

389 char serialWriteOptions[] =

"";

390 std::stringstream outf;

391 outf <<

"intxAtmOcn_" << rankInCouComm <<

".h5m";

392 std::string intxfile = outf.str();

398 if( couComm != MPI_COMM_NULL )

400 PUSH_TIMER(

"Compute the projection weights with TempestRemap" )

401 ierr = iMOAB_ComputeScalarProjectionWeights( cplAtmOcnPID, weights_identifiers[0].c_str(),

402 disc_methods[0].c_str(), &disc_orders[0], disc_methods[1].c_str(),

403 &disc_orders[1],

nullptr, &fNoBubble, &fMonotoneTypeID,

404 &fVolumetric, &fInverseDistanceMap, &fNoConserve, &fValidate,

405 dof_tag_names[0].c_str(), dof_tag_names[1].c_str() );

406 CHECKIERR(

ierr, "cannot compute scalar projection weights" )

410 #ifdef MOAB_HAVE_NETCDF

412 const std::string atmocn_map_file_name =

"atm_ocn_map2.nc";

413 ierr = iMOAB_WriteMappingWeightsToFile( cplAtmOcnPID, weights_identifiers[0].c_str(),

414 atmocn_map_file_name.c_str() );

417 const std::string intx_from_file_identifier =

"map-from-file";

419 int dummy_rowcol = -1;

421 ierr = iMOAB_LoadMappingWeightsFromFile( cplAtmOcnPID, &dummyCpl, &dummy_rowcol, &dummyType,

422 intx_from_file_identifier.c_str(), atmocn_map_file_name.c_str() );

432 #ifdef ENABLE_ATMCPLOCN_COUPLING

433 if( couComm != MPI_COMM_NULL )

435 PUSH_TIMER(

"Compute the projection weights with TempestRemap for atm2/ocn" )

436 ierr = iMOAB_ComputeScalarProjectionWeights( cplAtm2OcnPID, weights_identifiers[0].c_str(),

437 disc_methods[0].c_str(), &disc_orders[0], disc_methods[1].c_str(),

438 &disc_orders[1],

nullptr, &fNoBubble, &fMonotoneTypeID,

439 &fVolumetric, &fInverseDistanceMap, &fNoConserve, &fValidate,

440 dof_tag_names[0].c_str(), dof_tag_names[1].c_str() );

441 CHECKIERR(

ierr, "cannot compute scalar projection weights for atm2/ocn" )

445 #ifdef MOAB_HAVE_NETCDF

447 const std::string atmocn_map_file_name =

"atm2_ocn_map.nc";

448 ierr = iMOAB_WriteMappingWeightsToFile( cplAtm2OcnPID, weights_identifiers[0].c_str(),

449 atmocn_map_file_name.c_str() );

452 const std::string intx_from_file_identifier =

"map2-from-file";

454 int dummy_rowcol = -1;

456 ierr = iMOAB_LoadMappingWeightsFromFile( cplAtm2OcnPID, &dummyCpl, &dummy_rowcol, &dummyType,

457 intx_from_file_identifier.c_str(), atmocn_map_file_name.c_str() );

466 int atmCompNDoFs = disc_orders[0] * disc_orders[0], ocnCompNDoFs = 1 ;

468 const char* bottomFields =

"a2oTbot:a2oUbot:a2oVbot";

469 const char* bottomProjectedFields =

"a2oTbot_proj:a2oUbot_proj:a2oVbot_proj";

470 const char* bottomSourceFields2 =

"a2oT2bot_src:a2oU2bot_src:a2oV2bot_src";

471 const char* bottomProjectedFields3 =

"a2oT2bot_proj:a2oU2bot_proj:a2oV2bot_proj";

473 if( couComm != MPI_COMM_NULL )

480 #ifdef ENABLE_ATMOCN_COUPLING

482 CHECKIERR(

ierr,

"failed to define the field tag a2oTbot_proj" );

484 #ifdef ENABLE_ATMCPLOCN_COUPLING

486 CHECKIERR(

ierr,

"failed to define the field tag a2oT2bot_proj" );

488 CHECKIERR(

ierr,

"failed to define the field tag a2oT2bot_proj" );

500 if( cplAtmAppID >= 0 )

502 int nverts[3], nelem[3], nblocks[3], nsbc[3], ndbc[3];

512 int numAllElem = nelem[2];

513 std::vector< double > vals;

514 int storLeng = atmCompNDoFs * numAllElem * 3;

517 vals.resize( storLeng );

518 for(

int k = 0; k < storLeng; k++ )

525 if( cplAtm2AppID >= 0 )

527 int nverts[3], nelem[3], nblocks[3], nsbc[3], ndbc[3];

537 int numAllElem = nelem[2];

538 std::vector< double > vals;

539 int storLeng = atmCompNDoFs * numAllElem * 3;

542 vals.resize( storLeng );

543 for(

int k = 0; k < storLeng; k++ )

553 for(

int iters = 0; iters < n; iters++ )

555 #ifdef ENABLE_ATMOCN_COUPLING

556 PUSH_TIMER(

"Send/receive data from atm component to coupler in ocn context" )

557 if( atmComm != MPI_COMM_NULL )

561 ierr = iMOAB_SendElementTag( cmpAtmPID, bottomFields, &atmCouComm, &cplocn );

564 if( couComm != MPI_COMM_NULL )

567 ierr = iMOAB_ReceiveElementTag( cplAtmPID, bottomFields, &atmCouComm, &cplocn );

573 if( atmComm != MPI_COMM_NULL )

575 ierr = iMOAB_FreeSenderBuffers( cmpAtmPID, &cplocn );

576 CHECKIERR(

ierr,

"cannot free buffers used to resend atm tag towards the coverage mesh" )

579 if( couComm != MPI_COMM_NULL && 1 == n )

582 char outputFileRecvd[] =

"recvAtmCoupOcn.h5m";

584 CHECKIERR(

ierr,

"could not write recvAtmCoupOcn.h5m to disk" )

588 if( couComm != MPI_COMM_NULL )

592 PUSH_TIMER(

"Apply Scalar projection weights" )

593 ierr = iMOAB_ApplyScalarProjectionWeights( cplAtmOcnPID, weights_identifiers[0].c_str(), bottomFields,

594 bottomProjectedFields );

595 CHECKIERR(

ierr, "failed to compute projection weight application" );

599 char outputFileTgt[] =

"fOcnOnCpl1.h5m";

606 if( ocnComm != MPI_COMM_NULL )

611 CHECKIERR(

ierr,

"failed to define the field tag for receiving back the tags "

612 "a2oTbot_proj, a2oUbot_proj, a2oVbot_proj on ocn pes" );

618 if( couComm != MPI_COMM_NULL )

622 ierr = iMOAB_SendElementTag( cplOcnPID, bottomProjectedFields, &ocnCouComm, &context_id );

623 CHECKIERR(

ierr,

"cannot send tag values back to ocean pes" )

627 if( ocnComm != MPI_COMM_NULL )

630 ierr = iMOAB_ReceiveElementTag( cmpOcnPID, bottomProjectedFields, &ocnCouComm, &context_id );

631 CHECKIERR(

ierr,

"cannot receive tag values from ocean mesh on coupler pes" )

636 if( couComm != MPI_COMM_NULL )

639 ierr = iMOAB_FreeSenderBuffers( cplOcnPID, &context_id );

640 CHECKIERR(

ierr,

"cannot free send/receive buffers for OCN context" )

642 if( ocnComm != MPI_COMM_NULL && 1 == n )

644 char outputFileOcn[] =

"OcnWithProj.h5m";

648 if( !no_regression_test )

653 int nverts[3], nelem[3];

656 std::vector< int > gidElems;

657 gidElems.resize( nelem[2] );

658 std::vector< double > tempElems;

659 tempElems.resize( nelem[2] );

661 const std::string GidStr =

"GLOBAL_ID";

672 check_baseline_file( baseline, gidElems, tempElems, 1.e-9, err_code );

674 std::cout <<

" passed baseline test atm2ocn on ocean task " << rankInOcnComm <<

"\n";

679 #ifdef ENABLE_ATMCPLOCN_COUPLING

680 PUSH_TIMER(

"Send/receive data from atm2 component to coupler of all data" )

681 if( atmComm != MPI_COMM_NULL )

685 ierr = iMOAB_SendElementTag( cmpAtmPID, bottomFields, &atmCouComm, &cplatm2 );

688 if( couComm != MPI_COMM_NULL )

691 ierr = iMOAB_ReceiveElementTag( cplAtm2PID, bottomFields, &atmCouComm, &

cmpatm );

697 if( atmComm != MPI_COMM_NULL )

699 ierr = iMOAB_FreeSenderBuffers( cmpAtmPID, &cplatm );

700 CHECKIERR(

ierr,

"cannot free buffers used to resend atm tag towards the coverage mesh" )

703 if( couComm != MPI_COMM_NULL && 1 == n )

705 char outputFileRecvd[] =

"recvAtm2CoupFull.h5m";

707 CHECKIERR(

ierr,

"could not write recvAtmCoupLnd.h5m to disk" )

711 PUSH_TIMER(

"Send/receive data from atm2 coupler to ocean coupler based on coverage data" )

712 if( couComm != MPI_COMM_NULL )

716 ierr = iMOAB_SendElementTag( cplAtm2PID, bottomFields, &couComm, &atm2ocnid );

721 ierr = iMOAB_ReceiveElementTag( cplAtm2OcnPID, bottomSourceFields2, &couComm, &cplatm2 );

727 if( couComm != MPI_COMM_NULL )

729 ierr = iMOAB_FreeSenderBuffers( cplAtm2PID, &atm2ocnid );

730 CHECKIERR(

ierr,

"cannot free buffers used to resend atm tag towards the coverage mesh" )

742 if( couComm != MPI_COMM_NULL )

746 PUSH_TIMER(

"Apply Scalar projection weights" )

747 ierr = iMOAB_ApplyScalarProjectionWeights( cplAtm2OcnPID, weights_identifiers[0].c_str(),

748 bottomSourceFields2, bottomProjectedFields3 );

749 CHECKIERR(

ierr, "failed to compute projection weight application" );

753 char outputFileTgt[] =

"fOcnOnCpl0.h5m";

755 CHECKIERR(

ierr,

"could not write the second fOcnOnCpl0.h5m to disk" )

759 std::cout <<

"applied scalar projection\n";

761 if( ocnComm != MPI_COMM_NULL )

766 CHECKIERR(

ierr,

"failed to define the field tag for receiving back the tags "

767 "a2oTbot_proj, a2oUbot_proj, a2oVbot_proj on ocn pes" );

769 std::cout <<

"defined tag agian on ocn\n";

774 if( couComm != MPI_COMM_NULL )

778 ierr = iMOAB_SendElementTag( cplOcnPID, bottomProjectedFields3, &ocnCouComm, &context_id );

779 CHECKIERR(

ierr,

"cannot send tag values back to ocean pes" )

781 std::cout <<

"sent ocn data from coupler to component\n";

784 if( ocnComm != MPI_COMM_NULL )

787 ierr = iMOAB_ReceiveElementTag( cmpOcnPID, bottomProjectedFields3, &ocnCouComm, &context_id );

788 CHECKIERR(

ierr,

"cannot receive tag values from ocean mesh on coupler pes" )

790 std::cout <<

"received ocn data from coupler to component\n";

794 if( couComm != MPI_COMM_NULL )

797 ierr = iMOAB_FreeSenderBuffers( cplOcnPID, &context_id );

798 CHECKIERR(

ierr,

"cannot free send/receive buffers for OCN context" )

800 std::cout <<

"freed send/recv ocn data from coupler to component\n";

801 if( ocnComm != MPI_COMM_NULL && 1 == n )

803 char outputFileOcn[] =

"Ocn2WithProj.h5m";

805 CHECKIERR(

ierr,

"could not write Ocn2WithProj.h5m to disk" )

807 if( !no_regression_test )

812 int nverts[3], nelem[3];

815 std::vector< int > gidElems;

816 gidElems.resize( nelem[2] );

817 std::vector< double > tempElems;

818 tempElems.resize( nelem[2] );

820 const std::string GidStr =

"GLOBAL_ID";

831 check_baseline_file( baseline, gidElems, tempElems, 1.e-9, err_code );

833 std::cout <<

" passed baseline test atm2ocn on ocean task " << rankInOcnComm <<

"\n";

836 std::cout <<

"wrote ocn data on component to disk\n";

841 #ifdef ENABLE_ATMCPLOCN_COUPLING

842 if( couComm != MPI_COMM_NULL )

849 #ifdef ENABLE_ATMOCN_COUPLING

850 if( couComm != MPI_COMM_NULL )

855 if( ocnComm != MPI_COMM_NULL )

862 if( atmComm != MPI_COMM_NULL )

868 #ifdef ENABLE_ATMOCN_COUPLING

869 if( couComm != MPI_COMM_NULL )

876 if( couComm != MPI_COMM_NULL )

887 if( MPI_COMM_NULL != atmCouComm ) MPI_Comm_free( &atmCouComm );

888 MPI_Group_free( &joinAtmCouGroup );

889 if( MPI_COMM_NULL != atmComm ) MPI_Comm_free( &atmComm );

891 #ifdef ENABLE_ATMOCN_COUPLING

892 if( MPI_COMM_NULL != ocnComm ) MPI_Comm_free( &ocnComm );

894 if( MPI_COMM_NULL != ocnCouComm ) MPI_Comm_free( &ocnCouComm );

895 MPI_Group_free( &joinOcnCouGroup );

898 if( MPI_COMM_NULL != couComm ) MPI_Comm_free( &couComm );

900 MPI_Group_free( &atmPEGroup );

901 #ifdef ENABLE_ATMOCN_COUPLING

902 MPI_Group_free( &ocnPEGroup );

904 MPI_Group_free( &couPEGroup );

905 MPI_Group_free( &

jgroup );

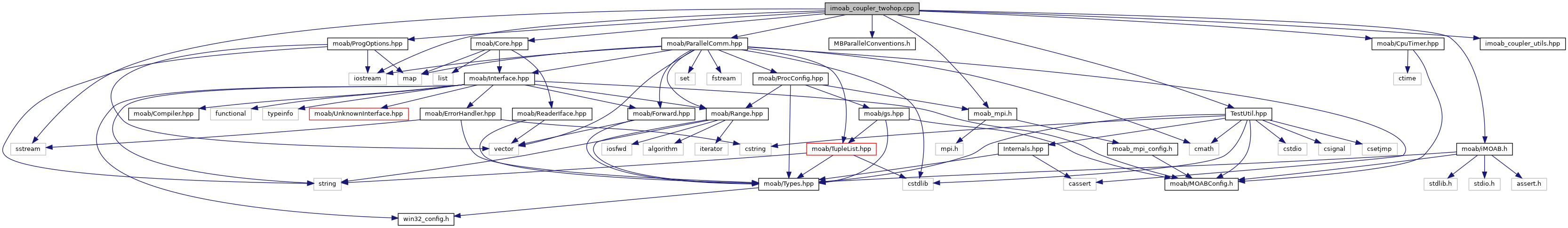

Include dependency graph for imoab_coupler_twohop.cpp:

Include dependency graph for imoab_coupler_twohop.cpp: