54 std::string readopts( "PARALLEL=READ_PART;PARTITION=PARALLEL_PARTITION;PARALLEL_RESOLVE_SHARED_ENTS" );

61 int repartitioner_scheme = 0;

62 #ifdef MOAB_HAVE_ZOLTAN

63 repartitioner_scheme = 2;
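The default above picks scheme 0 and switches to 2 only when MOAB is built with Zoltan. The driver's summary printout, later in this listing, labels the values as 0 trivial, 1 graph and 2 geometry, and the `partitioning,p` option overrides the default. The following is a minimal sketch of that selection logic in plain C++. `default_scheme` and `scheme_name` are hypothetical helpers written for illustration; they are not part of MOAB or iMOAB.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper mirroring the listing's default: 0 (trivial) unless
// MOAB was built with Zoltan (MOAB_HAVE_ZOLTAN), in which case 2 (geometry).
int default_scheme( bool have_zoltan )
{
    return have_zoltan ? 2 : 0;
}

// Hypothetical helper: names as printed by the driver,
// "(0 trivial, 1 graph, 2 geometry)"; any other value is reported as unknown.
std::string scheme_name( int scheme )
{
    switch( scheme )
    {
        case 0:
            return "trivial";
        case 1:
            return "graph";
        case 2:
            return "geometry";
        default:
            return "unknown";
    }
}
```

In the real driver the choice stays a plain `int`, which is later passed to the migration call together with `readopts` and `nghlay`.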

66 MPI_Init( &argc, &argv );

72 std::string atmFilename = TestDir + "unittest/atm_c2x.h5m";

87 #ifdef ENABLE_ATMOCN_COUPLING

88 std::string ocnFilename = TestDir + "unittest/wholeOcn.h5m";

89 int rankInOcnComm = -1;

90 int cmpocn = 17, cplocn = 18,

94 int rankInCouComm = -1;

107 opts.addOpt< std::string >( "atmosphere,t", "atm mesh filename (source)", &atmFilename );

108 #ifdef ENABLE_ATMOCN_COUPLING

109 opts.addOpt< std::string >( "ocean,m", "ocean mesh filename (target)", &ocnFilename );

110 std::string baseline = TestDir + "unittest/baseline3.txt";

112 opts.addOpt< int >( "startAtm,a", "start task for atmosphere layout", &startG1 );

113 opts.addOpt< int >( "endAtm,b", "end task for atmosphere layout", &endG1 );

114 #ifdef ENABLE_ATMOCN_COUPLING

115 opts.addOpt< int >( "startOcn,c", "start task for ocean layout", &startG2 );

116 opts.addOpt< int >( "endOcn,d", "end task for ocean layout", &endG2 );

119 opts.addOpt< int >( "startCoupler,g", "start task for coupler layout", &startG4 );

120 opts.addOpt< int >( "endCoupler,j", "end task for coupler layout", &endG4 );

122 opts.addOpt< int >( "partitioning,p", "partitioning option for migration", &repartitioner_scheme );

124 bool no_regression_test = false;
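The `startAtm`/`endAtm`, `startOcn`/`endOcn` and `startCoupler`/`endCoupler` options set the task layout of each component. The sketch below assumes each layout is the inclusive range of `MPI_COMM_WORLD` ranks from start to end. The group construction itself is elided from this listing; calling `MPI_Group_incl` over such a rank list is one standard way to build it. `task_range` and `in_layout` are hypothetical helpers that only illustrate the range arithmetic.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: the inclusive list of ranks start..end, i.e. the kind
// of rank list one could pass to MPI_Group_incl to form a component group.
std::vector< int > task_range( int start, int end )
{
    std::vector< int > ranks;
    for( int r = start; r <= end; ++r )
        ranks.push_back( r );
    return ranks;  // empty when end < start
}

// Hypothetical helper: does a world rank belong to the layout [start, end]?
bool in_layout( int rank, int start, int end )
{
    return rank >= start && rank <= end;
}
```

A rank outside a component's range would presumably receive `MPI_COMM_NULL` for that component's communicator. That is the condition the `if( atmComm != MPI_COMM_NULL )`-style guards throughout the listing test for.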

125 opts.addOpt< void >( "no_regression,r", "do not do regression test against baseline 3", &no_regression_test );

134 #ifdef ENABLE_ATMOCN_COUPLING

135 "\n ocn file: " << ocnFilename << "\n on tasks : " << startG2 << ":" << endG2 <<

137 "\n partitioning (0 trivial, 1 graph, 2 geometry) " << repartitioner_scheme << "\n ";

145 MPI_Group atmPEGroup;

148 CHECKIERR( ierr, "Cannot create atm MPI group and communicator " )

150 #ifdef ENABLE_ATMOCN_COUPLING

151 MPI_Group ocnPEGroup;

154 CHECKIERR( ierr, "Cannot create ocn MPI group and communicator " )

158 MPI_Group couPEGroup;

161 CHECKIERR( ierr, "Cannot create cpl MPI group and communicator " )

164 MPI_Group joinAtmCouGroup;

169 #ifdef ENABLE_ATMOCN_COUPLING

171 MPI_Group joinOcnCouGroup;

174 CHECKIERR( ierr, "Cannot create joint ocn cou communicator" )

180 int cmpAtmAppID = -1;

182 int cplAtmAppID = -1;

184 #ifdef ENABLE_ATMOCN_COUPLING

185 int cmpOcnAppID = -1;

187 int cplOcnAppID = -1, cplAtmOcnAppID = -1;
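The listing calls `CHECKIERR` after each MPI or iMOAB step, sometimes with a trailing semicolon and sometimes without. Its definition is not part of this listing. The sketch below is a hypothetical stand-in, `CHECKIERR_SKETCH`, that shows the usual shape of such a check: on a nonzero return code it prints the message and leaves the enclosing function with 1. A plain `if`-block macro accepts both call styles seen above. `run_step` is a hypothetical driver step added for illustration.

```cpp
#include <cassert>
#include <cstdio>

// Hypothetical stand-in for CHECKIERR: if rc is nonzero, print the message
// and the code, then return 1 from the enclosing function.
// The plain if-block form tolerates calls with or without a trailing ';'.
#define CHECKIERR_SKETCH( rc, message )                         \
    if( 0 != ( rc ) )                                           \
    {                                                           \
        std::printf( "%s. ErrorCode = %d\n", message, ( rc ) ); \
        return 1;                                               \
    }

// Hypothetical driver step: returns 0 on success, 1 if ierr signals failure.
int run_step( int ierr )
{
    CHECKIERR_SKETCH( ierr, "Cannot create atm MPI group and communicator" )
    return 0;
}
```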

192 if( couComm != MPI_COMM_NULL )

194 MPI_Comm_rank( couComm, &rankInCouComm );

199 #ifdef ENABLE_ATMOCN_COUPLING

206 if( atmComm != MPI_COMM_NULL )

213 #ifdef ENABLE_ATMOCN_COUPLING

214 if( ocnComm != MPI_COMM_NULL )

216 MPI_Comm_rank( ocnComm, &rankInOcnComm );

228 if( couComm != MPI_COMM_NULL )

230 char outputFileTgt3[] = "recvAtmx.h5m";

236 #ifdef ENABLE_ATMOCN_COUPLING

240 &couPEGroup, &ocnCouComm, ocnFilename, readopts, nghlay, repartitioner_scheme );

249 #ifdef ENABLE_ATMOCN_COUPLING

250 if( couComm != MPI_COMM_NULL )

254 CHECKIERR( ierr, "Cannot register ocn_atm intx over coupler pes " )

258 int disc_orders[1] = { 1 };

259 const std::string weights_identifiers[1] = { "bilinear" };

260 const std::string disc_methods[1] = { "fv" };

261 const std::string dof_tag_names[1] = { "GLOBAL_ID" };

262 const std::string method = "bilin";

263 #ifdef ENABLE_ATMOCN_COUPLING

264 if( couComm != MPI_COMM_NULL )

266 PUSH_TIMER( "Compute ATM-OCN mesh intersection" )

267 ierr = iMOAB_ComputeMeshIntersectionOnSphere( cplAtmPID, cplOcnPID, cplAtmOcnPID );

275 if( couComm != MPI_COMM_NULL )

283 ierr = iMOAB_ComputeCommGraph( cplAtmPID, cplAtmOcnPID, &couComm, &couPEGroup, &couPEGroup, &type1, &type2,

284 &cplatm, &atmocnid );

285 CHECKIERR( ierr, "cannot recompute direct coverage graph for ocean from atm" )

292 int fMonotoneTypeID = 0, fVolumetric = 0, fValidate = 0, fNoConserve = 0, fNoBubble = 1, fInverseDistanceMap = 0;

294 #ifdef ENABLE_ATMOCN_COUPLING

296 if( couComm != MPI_COMM_NULL )

298 PUSH_TIMER( "Compute the projection weights with TempestRemap" )

299 ierr = iMOAB_ComputeScalarProjectionWeights( cplAtmOcnPID, weights_identifiers[0].c_str(),

300 disc_methods[0].c_str(), &disc_orders[0], disc_methods[0].c_str(),

301 &disc_orders[0], method.c_str(), &fNoBubble, &fMonotoneTypeID,

302 &fVolumetric, &fInverseDistanceMap, &fNoConserve, &fValidate,

303 dof_tag_names[0].c_str(), dof_tag_names[0].c_str() );

304 CHECKIERR( ierr, "cannot compute scalar projection weights" )

308 #ifdef MOAB_HAVE_PNETCDF

310 std::stringstream outf;

311 outf << "atm_ocn_bilin_map_p" << endG4 - startG4 + 1 << ".nc";

312 std::string mapfile = outf.str();

313 ierr = iMOAB_WriteMappingWeightsToFile( cplAtmOcnPID, weights_identifiers[0].c_str(), outf.str().c_str() );
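When MOAB is built with PnetCDF, the bilinear map is written to a file whose name encodes the number of coupler tasks, `endG4 - startG4 + 1`. The sketch below isolates the naming rule from lines 311-312 of the listing in a standalone function. `map_file_name` is a hypothetical name; the iMOAB write call itself is not reproduced.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Naming rule from the listing: the suffix is the size of the inclusive
// coupler task range [startG4, endG4].
std::string map_file_name( int startG4, int endG4 )
{
    std::stringstream outf;
    outf << "atm_ocn_bilin_map_p" << endG4 - startG4 + 1 << ".nc";
    return outf.str();
}
```

For a coupler layout on tasks 0 through 3, this yields `atm_ocn_bilin_map_p4.nc`.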

325 int atmCompNDoFs = disc_orders[0] * disc_orders[0], ocnCompNDoFs = 1 ;

327 const char* bottomFields = "Sa_dens:Sa_pbot";

328 const char* bottomProjectedFields = "Sa_dens:Sa_pbot";

330 if( ocnComm != MPI_COMM_NULL )

334 CHECKIERR( ierr, "failed to define the field tag Sa_dens:Sa_pbot" );

337 if( couComm != MPI_COMM_NULL )

340 CHECKIERR( ierr, "failed to define the field tag Sa_dens:Sa_pbot" );

341 #ifdef ENABLE_ATMOCN_COUPLING

343 CHECKIERR( ierr, "failed to define the field tag Sa_dens:Sa_pbot" );

355 if( cplAtmAppID >= 0 )

357 int nverts[3], nelem[3], nblocks[3], nsbc[3], ndbc[3];

367 int numAllElem = nelem[2];

368 std::vector< double > vals;

369 int storLeng = atmCompNDoFs * numAllElem * 3;

372 vals.resize( storLeng );

373 for( int k = 0; k < storLeng; k++ )

382 const char* concat_fieldname = "Sa_dens:Sa_pbot";

383 const char* concat_fieldnameT = "Sa_dens:Sa_pbot";

385 #ifdef ENABLE_ATMOCN_COUPLING

387 PUSH_TIMER( "Send/receive data from atm component to coupler in atm context" )

388 if( atmComm != MPI_COMM_NULL )

392 ierr = iMOAB_SendElementTag( cmpAtmPID, bottomFields, &atmCouComm, &cplatm );

395 if( couComm != MPI_COMM_NULL )

398 ierr = iMOAB_ReceiveElementTag( cplAtmPID, bottomFields, &atmCouComm, &cmpatm );

404 if( atmComm != MPI_COMM_NULL )

406 ierr = iMOAB_FreeSenderBuffers( cmpAtmPID, &cplatm );

407 CHECKIERR( ierr, "cannot free buffers used to resend atm tag towards the coverage mesh" )

415 if( couComm != MPI_COMM_NULL )

418 ierr = iMOAB_SendElementTag( cplAtmPID, bottomFields, &couComm, &atmocnid );

419 CHECKIERR( ierr, "cannot send tag values towards coverage mesh for bilinear map" )

421 ierr = iMOAB_ReceiveElementTag( cplAtmOcnPID, bottomFields, &couComm, &cplatm );

422 CHECKIERR( ierr, "cannot receive tag values for bilinear map" )

424 ierr = iMOAB_FreeSenderBuffers( cplAtmPID, &atmocnid );
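The tag-name arguments in these calls (`bottomFields`, and `concat_fieldname` in the projection step) pack two fields, `Sa_dens` and `Sa_pbot`, into one colon-separated string. This lets a single send, receive or projection call handle both fields. The sketch below shows how such a string separates into individual tag names, assuming `:` is the only delimiter, as in the listing. `split_tag_names` is hypothetical and is not taken from iMOAB's own parsing code.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper: split "Sa_dens:Sa_pbot" into {"Sa_dens", "Sa_pbot"}.
std::vector< std::string > split_tag_names( const std::string& concat )
{
    std::vector< std::string > names;
    std::stringstream ss( concat );
    std::string name;
    while( std::getline( ss, name, ':' ) )
        if( !name.empty() ) names.push_back( name );  // skip empty pieces, e.g. from "a::b"
    return names;
}
```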

428 if( couComm != MPI_COMM_NULL )

432 PUSH_TIMER( "Apply Scalar projection weights" )

433 ierr = iMOAB_ApplyScalarProjectionWeights( cplAtmOcnPID, weights_identifiers[0].c_str(), concat_fieldname,

435 CHECKIERR( ierr, "failed to compute projection weight application" );

438 char outputFileTgt[] = "fOcnBilinOnCpl.h5m";

444 if( couComm != MPI_COMM_NULL )

448 ierr = iMOAB_SendElementTag( cplOcnPID, "Sa_dens:Sa_pbot", &ocnCouComm, &context_id );

449 CHECKIERR( ierr, "cannot send tag values back to ocean pes" )

453 if( ocnComm != MPI_COMM_NULL )

456 ierr = iMOAB_ReceiveElementTag( cmpOcnPID, "Sa_dens:Sa_pbot", &ocnCouComm, &context_id );

457 CHECKIERR( ierr, "cannot receive tag values from ocean mesh on coupler pes" )

462 if( couComm != MPI_COMM_NULL )

465 ierr = iMOAB_FreeSenderBuffers( cplOcnPID, &context_id );

466 CHECKIERR( ierr, "cannot free send/receive buffers for OCN context" )

468 if( ocnComm != MPI_COMM_NULL )

470 char outputFileOcn[] = "OcnWithProjBilin.h5m";

475 if( !no_regression_test && ( ocnComm != MPI_COMM_NULL ) )

480 int nverts[3], nelem[3];

483 std::vector< int > gidElems;

484 gidElems.resize( nelem[2] );

485 std::vector< double > tempElems;

486 tempElems.resize( nelem[2] );

488 const std::string GidStr = "GLOBAL_ID";

508 check_baseline_file( baseline, gidElems, tempElems, 1.e-9, err_code );

509 if( 0 == err_code ) std::cout << " passed baseline test atm2ocn on ocean task " << rankInOcnComm << "\n";

514 #ifdef ENABLE_ATMOCN_COUPLING

515 if( couComm != MPI_COMM_NULL )

520 if( ocnComm != MPI_COMM_NULL )

527 if( atmComm != MPI_COMM_NULL )

533 #ifdef ENABLE_ATMOCN_COUPLING

534 if( couComm != MPI_COMM_NULL )

541 if( couComm != MPI_COMM_NULL )

552 if( MPI_COMM_NULL != atmCouComm ) MPI_Comm_free( &atmCouComm );

553 MPI_Group_free( &joinAtmCouGroup );

554 if( MPI_COMM_NULL != atmComm ) MPI_Comm_free( &atmComm );

556 #ifdef ENABLE_ATMOCN_COUPLING

557 if( MPI_COMM_NULL != ocnComm ) MPI_Comm_free( &ocnComm );

559 if( MPI_COMM_NULL != ocnCouComm ) MPI_Comm_free( &ocnCouComm );

560 MPI_Group_free( &joinOcnCouGroup );

563 if( MPI_COMM_NULL != couComm ) MPI_Comm_free( &couComm );

565 MPI_Group_free( &atmPEGroup );

566 #ifdef ENABLE_ATMOCN_COUPLING

567 MPI_Group_free( &ocnPEGroup );

570 MPI_Group_free( &couPEGroup );

571 MPI_Group_free( &jgroup );
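In the regression step, each ocean task collects its element `GLOBAL_ID`s (`gidElems`) and projected values (`tempElems`). It then calls `check_baseline_file( baseline, gidElems, tempElems, 1.e-9, err_code )` against `baseline3.txt`. The implementation of that helper is not shown in this listing. The sketch below assumes a hypothetical baseline format of one `gid value` pair per line. It treats a missing id, or a difference larger than the tolerance, as a failure. `check_baseline_sketch` is illustrative only and reads from any `std::istream` so that it can be exercised without a file.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <istream>
#include <map>
#include <sstream>
#include <vector>

// Hypothetical baseline check. Reads "gid value" pairs, then requires every
// local element (gids[i], vals[i]) to match its baseline value within eps.
// Sets err_code to 0 on success, 1 on the first missing gid or mismatch.
void check_baseline_sketch( std::istream& in, const std::vector< int >& gids,
                            const std::vector< double >& vals, double eps, int& err_code )
{
    std::map< int, double > ref;
    int gid;
    double value;
    while( in >> gid >> value )
        ref[gid] = value;

    err_code = 0;
    for( std::size_t i = 0; i < gids.size(); ++i )
    {
        std::map< int, double >::const_iterator it = ref.find( gids[i] );
        if( it == ref.end() || std::fabs( it->second - vals[i] ) > eps )
        {
            err_code = 1;
            return;
        }
    }
}
```

Keying the comparison by global id, rather than by local position, keeps the check independent of how the ocean mesh is partitioned across tasks.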

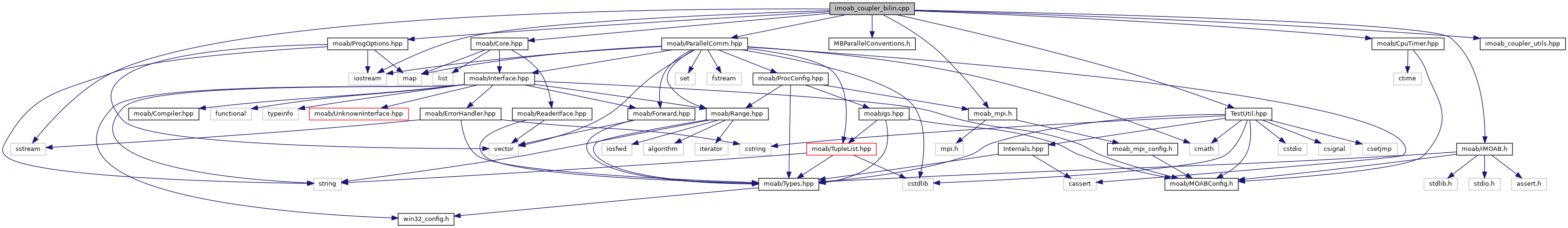

Include dependency graph for imoab_coupler_bilin.cpp: